Alexa Blogs

In honor of the Alexa Skills Challenge: Games, we're excited to share a new Twitch series on how to build an Alexa game skill. My colleague Jamie Grossman and I streamed six episodes covering how to build an engaging voice game experience, from ideation to implementation.

If you weren't able to tune into the series on Twitch, here's a recap of each episode along with the best practices we covered. We hope you can use these insights to build your own Alexa game skill.

Episodes 1 + 2: Brainstorming & Voice Design Best Practices

The first session was dedicated to brainstorming a game idea. Before we could brainstorm a voice game, we needed to recap what works and what doesn't when it comes to building great voice experiences. So we looked at which aspects of game design translate well to voice and which don't. The table below lists some of the factors that influenced our voice-design decisions.

| Good Voice Design Decisions | Voice Design Decisions to Avoid |
| --- | --- |
| Turn-based/asynchronous interactions | Real-time interactions |
| Short/concise inputs | Long-form complex inputs |
| Deterministic inputs (yes/no/closed list) | Non-deterministic inputs |
| Optional/progressive enhancements | Complex settings/visuals |
| Puzzle/RTS/questions/logic/stories | Movement/complex time-based interactions |

You might look at some of the items in the right column and wonder why they aren't recommended. These mechanics are harder to implement effectively given how voice works and how we interact with Alexa-enabled devices. For example, every input has to be processed and sent to the skill back end, and the user has to listen to the response before taking the next action. Real-time interactions therefore become tricky, because several parts of this communication chain can introduce bottlenecks or delays (such as a slow API call or a user who doesn't answer in time).

We also wanted to keep the inputs relatively simple, mainly to keep the back-end logic manageable. With long, non-deterministic inputs, it becomes harder to rely solely on the automatic speech recognition (ASR) and natural language understanding (NLU) engines of Alexa, because some of the semantics would need to be parsed and processed on our own back end.
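To illustrate why a closed list keeps the back end simple, here is a minimal sketch. When the set of accepted values is known, mapping an answer to a canonical item is a table lookup rather than free-form parsing. The `FOOD_SYNONYMS` table and the `resolveFood` function are hypothetical and are not taken from the code built on stream.

```typescript
// Hypothetical closed list: each canonical food item maps to the spoken
// variants the skill accepts for it.
const FOOD_SYNONYMS: Record<string, string[]> = {
  apples: ["apple", "apples"],
  bananas: ["banana", "bananas"],
  milk: ["milk", "a carton of milk", "a bottle of milk"],
};

// Maps a spoken phrase to its canonical value, or returns undefined when the
// phrase is outside the closed list so the skill can reprompt.
function resolveFood(spoken: string): string | undefined {
  const normalized = spoken.trim().toLowerCase();
  for (const canonical of Object.keys(FOOD_SYNONYMS)) {
    if (FOOD_SYNONYMS[canonical].indexOf(normalized) !== -1) {
      return canonical;
    }
  }
  return undefined;
}

console.log(resolveFood(" Apple ")); // apples
console.log(resolveFood("a chainsaw")); // undefined
```

Anything outside the list becomes a clear "didn't understand" case that the skill can reprompt for. Handling open-ended phrasing would require real parsing logic instead.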

The beauty of Twitch is that viewers can actively participate in your creative process. During the episode, we brainstormed ideas together with our Twitch audience. After a good amount of back and forth, we arrived at an idea for a game called Memory Market. It's essentially a memory game: the user is auditioning to be Alexa’s helper, and the main test is helping to remember customers’ shopping lists. Alexa asks the user to remember increasingly difficult lists, and the different aisles of the supermarket represent progressively harder levels.
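The "aisles as levels" idea can be modeled as data. The following is a minimal sketch under assumed values. The aisle titles, item lists, and list lengths are hypothetical, and `buildShoppingList` is an illustrative helper rather than the game's actual code.

```typescript
// Hypothetical level design: each supermarket aisle is a level, and later
// aisles ask the player to remember longer shopping lists (one to three items).
interface Aisle {
  title: string;
  items: string[]; // products a customer in this aisle might ask for
  listLength: number; // how many items the customer asks for per question
}

const AISLES: Aisle[] = [
  { title: "produce", items: ["apples", "bananas", "carrots", "lettuce"], listLength: 1 },
  { title: "dairy", items: ["milk", "butter", "cheese", "yogurt"], listLength: 2 },
  { title: "bakery", items: ["bread", "bagels", "muffins", "croissants"], listLength: 3 },
];

// Returns a random integer in [0, n).
function randomIndex(n: number): number {
  return Math.floor(Math.random() * n);
}

// Builds a shopping list for a 0-based level without repeating items.
// Levels past the last aisle reuse the hardest aisle. `pick` is injectable
// so tests can make the choice deterministic.
function buildShoppingList(level: number, pick: (n: number) => number = randomIndex): string[] {
  const index = Math.max(0, Math.min(level, AISLES.length - 1));
  const aisle = AISLES[index];
  const remaining = aisle.items.slice(); // copy so picked items can be removed
  const list: string[] = [];
  while (list.length < aisle.listLength && remaining.length > 0) {
    list.push(remaining.splice(pick(remaining.length), 1)[0]);
  }
  return list;
}
```

For example, `buildShoppingList(2, () => 0)` always returns the first three bakery items. Calling it without a `pick` argument draws random items. Keeping the difficulty curve in a data table means levels can be tuned without touching the game logic.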

Watch the full episode here:

Episodes 3 + 4: Planning & Prototyping the Game Skill

With the idea from the first two episodes as our north star, we heeded the age-old saying: weeks of programming can save you hours of planning. We therefore dedicated almost the entire episode to planning as much of the game as we could up front. We mapped out the level progression, point system, question structure, inputs, and some preliminary copy. We also made some code-design decisions: how to structure the attributes and states of our game and, most importantly, which intents, slots, and slot types we would need. You can find the recording here.
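One way to write down the game attributes and level progression planned here is as a typed state object. This is a hedged sketch. The state names, the `GameAttributes` fields, and the tuning constants (`QUESTIONS_PER_LEVEL`, `MAX_LEVEL`) are all hypothetical choices made for illustration.

```typescript
// Hypothetical game states; the skill would read `state` on each request.
type GameState = "START" | "ASKING" | "GAME_OVER";

// Hypothetical shape of the session attributes kept between turns.
interface GameAttributes {
  state: GameState;
  level: number; // index of the current aisle
  score: number; // total points so far
  currentList: string[]; // items the player must recall this turn
  questionsInLevel: number; // questions answered in the current aisle
}

// Assumed tuning values, for illustration only.
const QUESTIONS_PER_LEVEL = 3;
const MAX_LEVEL = 2;

function newGame(): GameAttributes {
  return { state: "START", level: 0, score: 0, currentList: [], questionsInLevel: 0 };
}

// Adds the points for one answer, moves to the next aisle after
// QUESTIONS_PER_LEVEL questions, and ends the game after the last aisle.
function recordAnswer(attrs: GameAttributes, points: number): GameAttributes {
  const next: GameAttributes = {
    ...attrs,
    currentList: attrs.currentList.slice(),
    score: attrs.score + points,
    questionsInLevel: attrs.questionsInLevel + 1,
  };
  if (next.questionsInLevel >= QUESTIONS_PER_LEVEL) {
    next.questionsInLevel = 0;
    if (next.level >= MAX_LEVEL) {
      next.state = "GAME_OVER";
    } else {
      next.level += 1;
    }
  }
  return next;
}
```

Returning a new object instead of mutating the input keeps each turn's transition easy to test in isolation.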

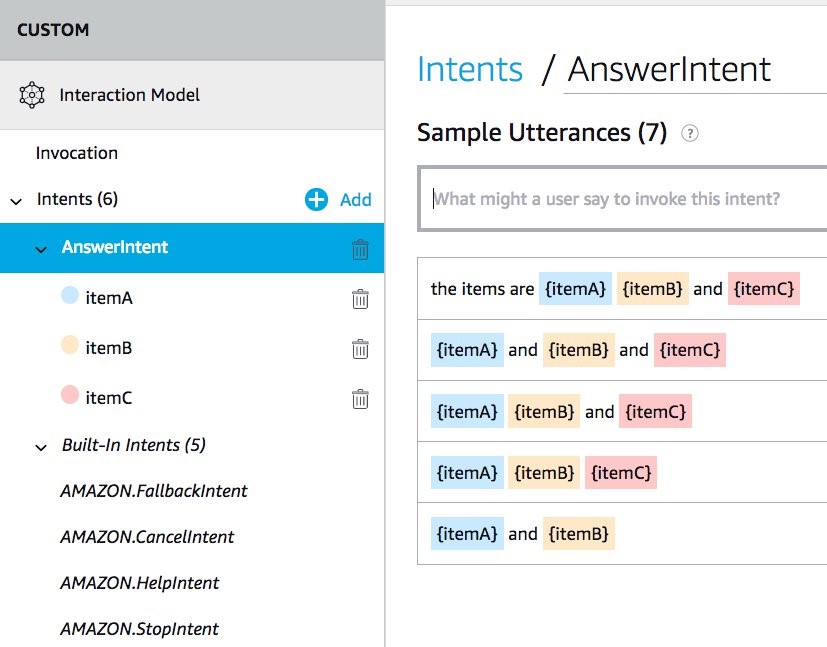

To understand what sort of interaction model we would be building, we started by acting out the interaction between the two of us, continuously incorporating suggestions from the viewers. Once we had a back-and-forth interaction that satisfied both us and the viewers, we dissected it to pick out the intents we would need. We concluded that we really needed only one main intent, AnswerIntent, because we would manage the game state in the back end.
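A single AnswerIntent works because the back end, not the interaction model, decides what an answer means. A minimal sketch of that routing follows, assuming a `state` string is stored in the session attributes. The action names and `routeAnswerIntent` are hypothetical.

```typescript
// Hypothetical actions the back end can take in response to AnswerIntent.
type Action = "PROMPT_TO_START" | "CHECK_ANSWER" | "OFFER_RESTART";

// Session attributes arrive as untyped JSON, so the stored state is treated
// as a plain string; anything unrecognized falls back to a safe default.
function routeAnswerIntent(state: string | undefined): Action {
  switch (state) {
    case "ASKING":
      return "CHECK_ANSWER"; // a shopping list is pending, so grade the answer
    case "GAME_OVER":
      return "OFFER_RESTART"; // nothing to grade; offer a new game
    default:
      return "PROMPT_TO_START"; // "START", missing, or unknown state
  }
}

const sessionAttributes: { state?: string } = { state: "ASKING" };
console.log(routeAnswerIntent(sessionAttributes.state)); // CHECK_ANSWER
```

The same utterance ("milk and bread") is graded in one state and treated as premature in another, without a second intent.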

Then we thought about how the user inputs (that is, the answer utterances of AnswerIntent) would work. If you remember from the blog post on principles for building good voice games, some games leverage a simple input mechanic (such as Yes Sire, where the user only says Yes or No). Designing your game so that the input is simple drastically reduces the user's cognitive load when formulating an answer, and it simplifies parsing the answer on the back end. In our case, we decided that any answer could contain one, two, or three food items, so every question we asked clearly asked the user for a list of food items. Points are calculated according to how many items the user recalls (up to three).
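The point rule above can be sketched as a small function. This assumes one point per correctly recalled item and case-insensitive matching. It also assumes a repeated item doesn't earn extra points. Those details, and the name `scoreAnswer`, are hypothetical. Only the one-to-three-item cap comes from the design described here.

```typescript
// At most three items are considered per answer.
const MAX_ITEMS_PER_ANSWER = 3;

// Awards one point (an assumption) for each expected item the player recalls.
// Matching ignores case, and repeating an item does not earn extra points.
function scoreAnswer(expected: string[], answered: string[]): number {
  const wanted = expected.map((item) => item.toLowerCase());
  const counted: string[] = [];
  for (const raw of answered.slice(0, MAX_ITEMS_PER_ANSWER)) {
    const item = raw.toLowerCase();
    if (wanted.indexOf(item) !== -1 && counted.indexOf(item) === -1) {
      counted.push(item);
    }
  }
  return counted.length;
}

console.log(scoreAnswer(["milk", "bread", "eggs"], ["Milk", "eggs", "milk"])); // 2
```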

Here's what the interaction model and AnswerIntent look like:
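The following is a hypothetical sketch, not the exact model from the stream. It shows how an interaction model with a single AnswerIntent that accepts one to three food items could be structured. The invocation name, the slot names (`itemOne`, `itemTwo`, `itemThree`), the `FOOD_ITEM` slot type, its values, and the sample utterances are all assumptions. The object mirrors the JSON shape used in the Alexa developer console's JSON Editor. Serializing it with `JSON.stringify` produces JSON in that shape.

```typescript
// Hypothetical one-intent interaction model, written as a TypeScript object
// with the same shape as the interaction model JSON.
const interactionModel = {
  interactionModel: {
    languageModel: {
      invocationName: "memory market",
      intents: [
        { name: "AMAZON.CancelIntent", samples: [] as string[] },
        { name: "AMAZON.HelpIntent", samples: [] as string[] },
        { name: "AMAZON.StopIntent", samples: [] as string[] },
        {
          name: "AnswerIntent",
          // One slot per recalled item, up to three items per answer.
          slots: [
            { name: "itemOne", type: "FOOD_ITEM" },
            { name: "itemTwo", type: "FOOD_ITEM" },
            { name: "itemThree", type: "FOOD_ITEM" },
          ],
          samples: [
            "{itemOne}",
            "{itemOne} and {itemTwo}",
            "{itemOne} and {itemTwo} and {itemThree}",
            "I need {itemOne} and {itemTwo} and {itemThree}",
          ],
        },
      ],
      types: [
        {
          name: "FOOD_ITEM",
          values: [
            { name: { value: "apples" } },
            { name: { value: "milk", synonyms: ["a carton of milk"] } },
            { name: { value: "bread" } },
          ],
        },
      ],
    },
  },
};

// Serialized form, suitable for pasting into a JSON-based editor.
const modelJson = JSON.stringify(interactionModel, null, 2);
console.log(modelJson);
```

Because the game state lives in the back end, this one intent can carry every answer. Each question just needs to ask clearly for a short list of food items.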

We concluded the session by quickly sketching out the back-end code using our favorite AWS Lambda scaffolding tool: the code generator. Keep in mind that this tool generates a lot of code. Make sure you are familiar with some of the basic templates so that you can tell which functions are helpers and which are core parts of the Alexa Skills Kit (ASK) Software Development Kit (SDK) for Node.js.

Watch the full episode here:

Episodes 5 + 6: Implementation

In the final episodes, we dug into the meatier back-end development. Due to time constraints, we did some of the work offline. That way, the stream could focus on key concepts such as Amazon Polly integration, localization, state management, session attributes, and persistence. You can find the recording here.

We recently announced that you can now use SSML to access Amazon Polly voices. This feature wasn't available when this episode aired, so we ended up writing an Alexa-to-Polly integration layer with built-in Amazon S3 caching. It's packaged as an NPM module, which you can find here if you still want to use it. Now that SSML support is available, we recommend using it to integrate Amazon Polly voices, unless you need a language that it doesn't currently support.
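With SSML, switching to an Amazon Polly voice means wrapping the text in a `<voice>` tag. The helper below is a minimal sketch. The function names are hypothetical. The voice name is passed through unchanged, so check the Alexa SSML reference for the voices available in your locale. The helper returns only the `<voice>` element. Add the outer `<speak>` element yourself if your SDK or response code does not already add it.

```typescript
// Escapes the characters that are special in XML so arbitrary text can be
// placed inside SSML tags safely.
function escapeForSsml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Wraps text in an SSML <voice> element so Alexa renders it with the named
// Amazon Polly voice.
function withPollyVoice(voiceName: string, text: string): string {
  return `<voice name="${escapeForSsml(voiceName)}">${escapeForSsml(text)}</voice>`;
}

console.log(withPollyVoice("Matthew", "Hello from the market!"));
// <voice name="Matthew">Hello from the market!</voice>
```

Escaping matters because game copy often contains characters like `&` (for example, "salt & pepper"), which would otherwise produce invalid SSML.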

Another aspect we covered during the stream is localization: how to support several languages from the same underlying AWS Lambda function. For details on what this involves, check out my previous blog post on how to localize your Alexa skills.
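Serving several languages from one AWS Lambda function usually comes down to choosing a string table from the locale in the incoming request (for example, `en-US`). Here is a minimal sketch with a fallback chain. The `RESOURCES` tables, the keys, and the `translate` function are hypothetical and are not the approach from the linked post.

```typescript
// Hypothetical string tables keyed by locale or bare language code.
type Strings = Record<string, string>;

const RESOURCES: Record<string, Strings> = {
  en: { WELCOME: "Welcome to Memory Market!", GOOD_JOB: "Nice memory!" },
  "en-GB": { WELCOME: "Welcome to Memory Market, the finest shop in town!" },
  de: { WELCOME: "Willkommen bei Memory Market!", GOOD_JOB: "Gutes Gedächtnis!" },
};

const DEFAULT_LANGUAGE = "en";

// Looks up a key for a locale, falling back from "en-GB" to "en" and then to
// the default language, so partially translated locales still work.
function translate(locale: string, key: string): string {
  const language = locale.split("-")[0];
  const candidates = [locale, language, DEFAULT_LANGUAGE];
  for (const candidate of candidates) {
    const table = RESOURCES[candidate];
    if (table && table[key] !== undefined) {
      return table[key];
    }
  }
  return key; // last resort: surface the key so missing strings are obvious
}

console.log(translate("en-GB", "GOOD_JOB")); // Nice memory!
```

Falling back by language lets a regional table override only the lines that differ.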

Finally, we covered score keeping, maintaining state, session attributes, and data persistence. If you weren't able to make the stream, you can learn how to incorporate all of these by following some of the existing tutorials in our GitHub repository, such as the high-low game and trivia templates.
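The split between session attributes and persistence can be summarized briefly. Session attributes hold the in-progress game, while a persistent store keeps what should survive between sessions. The sketch below assumes a synchronous in-memory store keyed by the Alexa user ID from the request. A real skill would typically use a database such as Amazon DynamoDB, and those calls would be asynchronous. `SavedProgress`, `ProgressStore`, and `finishGame` are hypothetical names.

```typescript
// Hypothetical data that should survive between sessions.
interface SavedProgress {
  highScore: number;
  gamesPlayed: number;
}

// Minimal storage interface; a database-backed version would replace the
// in-memory one below.
interface ProgressStore {
  load(userId: string): SavedProgress | undefined;
  save(userId: string, progress: SavedProgress): void;
}

function createInMemoryStore(): ProgressStore {
  const data: Record<string, SavedProgress> = {};
  return {
    load: (userId) => data[userId],
    save: (userId, progress) => {
      // Store a copy so later changes to `progress` don't leak into the store.
      data[userId] = { highScore: progress.highScore, gamesPlayed: progress.gamesPlayed };
    },
  };
}

// Called when a game ends: merges this game's score (tracked in session
// attributes during play) into the persisted progress.
function finishGame(store: ProgressStore, userId: string, sessionScore: number): SavedProgress {
  const previous = store.load(userId) || { highScore: 0, gamesPlayed: 0 };
  const updated: SavedProgress = {
    highScore: Math.max(previous.highScore, sessionScore),
    gamesPlayed: previous.gamesPlayed + 1,
  };
  store.save(userId, updated);
  return updated;
}

const store = createInMemoryStore();
finishGame(store, "user-1", 5);
console.log(finishGame(store, "user-1", 3)); // { highScore: 5, gamesPlayed: 2 }
```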

Watch the full episode here:

Join Us Every Week on Twitch

If you have questions about this Twitch series or want to share your own game skill ideas with us, please reach out to @muttonia and @jamielliottg on Twitter.

You can find the Alexa team on Twitch every week at twitch.tv/amazonalexa. If you would like to get notifications every time we stream, make sure to follow our channel by clicking the purple heart icon. You can also tune in to Twitch every Tuesday at 1 pm PT for Alexa Office Hours. During these weekly one-hour sessions, a rotating cast of Alexa evangelists is available to answer your skill-building questions.

Related Content

- Alexa for Gaming

- Best Practices for Building Voice-First Games for Alexa

- Build an Alexa Quiz Game

- Build an Alexa Trivia Game

- Tips on State Management at Different Levels

Build Skills, Earn Developer Perks

Bring your big idea to life with Alexa and earn perks through our milestone-based developer promotion. US developers, publish your first Alexa skill and earn a custom Alexa developer t-shirt. Publish a skill for Alexa-enabled devices with screens and earn an Echo Spot. Publish a skill using the Gadgets Skill API and earn a 2-pack of Echo Buttons. If you're not in the US, check out our promotions in Canada, the UK, Germany, Japan, France, Australia, and India. Learn more about our promotion and start building today.