Alexa Blogs

Alexa offers your customers a new way to interface with technology: a convenient UI that enables them to plan their day, stream media, and access news and information. If you’re planning on building a device with the Alexa Voice Service (AVS), you’ll want the right amount of central processing unit (CPU) power, memory, and flash storage to ensure your product brings a delightful hands-free Alexa experience to your customers.

In this blog post, we provide examples of existing AVS device solutions that can be used as a guide for sizing up CPU, memory, and storage for a headless voice-forward device with microphone(s) and speaker(s). Please note that this post does not cover CPU or memory requirements for screen-based devices, tap-to-talk Alexa implementations, smart home use cases, or Alexa Calling and Messaging.

Sizing Up CPU

Sizing an embedded system processor is a combination of science and art. A common but outdated convention is to use Dhrystone MIPS (Million Instructions Per Second), or DMIPS, which measures processor performance relative to the 1970s-era DEC VAX 11/780 minicomputer. DMIPS figures are generally reported as DMIPS/MHz, so multiplying by a processor’s clock frequency in MHz gives its typical DMIPS at that frequency. The Dhrystone benchmark suffers from several shortcomings: results can vary considerably on the same hardware depending on the compiler, on compiler optimization settings that optimize away large portions of the test code, and on wait-state delays for reading from memory. Other benchmarks suffer similarly. At the end of the day, your real-world application is the final judge of actual performance.
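As a rough illustration of the arithmetic behind these figures, the sketch below converts a raw Dhrystone score into DMIPS and DMIPS/MHz. It relies on the conventional definition that 1 DMIPS corresponds to the VAX 11/780 score of 1757 Dhrystones per second. The function names and the sample score and clock speed are hypothetical and are not taken from any device discussed in this post.

```python
# Reference score of the DEC VAX 11/780, which defines 1 DMIPS.
VAX_11_780_DHRYSTONES_PER_SEC = 1757


def dmips(dhrystones_per_sec: float) -> float:
    """Convert a raw Dhrystone score into DMIPS (relative to the VAX 11/780)."""
    return dhrystones_per_sec / VAX_11_780_DHRYSTONES_PER_SEC


def dmips_per_mhz(dhrystones_per_sec: float, clock_mhz: float) -> float:
    """Normalize DMIPS by clock frequency, the form usually quoted by vendors."""
    return dmips(dhrystones_per_sec) / clock_mhz


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    score = 3_514_000   # Dhrystones per second reported by a benchmark run
    clock = 1000.0      # processor clock in MHz
    print(f"{dmips(score):.0f} DMIPS, {dmips_per_mhz(score, clock):.2f} DMIPS/MHz")
```

The same hardware can produce a different score with another compiler or optimization level, so a number computed this way is best treated as a coarse comparison aid rather than a sizing target.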

CPU and Memory Work Together

An Alexa client application requires host processor cycles for tasks such as wake word detection and data compression and decompression. It also requires memory to buffer outbound and inbound audio streams for Text-To-Speech (TTS) and music playback. To keep costs to a minimum, developers commonly use compiler options for code size optimization and code compaction techniques to generate smaller executables that fit more easily in the limited memory of embedded systems. These constraints make it necessary to consider both CPU and memory when developing and optimizing embedded systems software.

Programming styles on large versus small computer systems can also vary and affect the required processing power and memory. The adage that compute cycles are cheaper than human programming cycles generally applies to large computer systems but does not necessarily translate well to small embedded systems. While it’s true that System on Chip (SoC) and System on Module (SOM) capabilities continue to increase as costs fall, competitive markets and tight margins demand close scrutiny of the overall system cost, especially when millions of units are being produced. Techniques such as code profiling help isolate the portions of a program that use the most CPU or memory. Focusing optimization on these areas is a first step in reducing the overhead of software components, which ultimately enables a lower-cost hardware solution.

Headroom, Not Overhead

Software component overhead is often reported in DMIPS, which we’ve already seen can be problematic. Generally, overhead is calculated as the sum of all components believed to contribute. The challenge lies in knowing all of the contributors, and in knowing whether other components running on the system, some of them periodic, were also considered. Software involving complex and unpredictable interactions makes this even more challenging.

Headroom provides a measurement of what’s left, given all else. Measuring the headroom of unused processor cycles, memory, and storage accounts for all components running on the system. Also, by starting with the AVS Device SDK sample application (SampleApp) and measuring the associated headroom, you’ll be able to determine how much is left for your application’s features and customizations. Available tools on Linux for measuring CPU, memory, and storage include top, df, and inspection of /proc/meminfo. Be sure to subtract out the CPU and memory usage of top itself when using it.
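As one possible way to take such a snapshot without an interactive tool, the sketch below reads the same Linux sources directly: `/proc/stat` for idle CPU time, `/proc/meminfo` for available memory, and the filesystem for free storage. It is a minimal sketch that assumes a Linux target with Python available; the helper names, the one-second sampling interval, and the choice of the root filesystem are assumptions for illustration and are not part of the AVS Device SDK.

```python
import shutil
import time


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field_name: value} (mostly kB)."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[name.strip()] = int(fields[0])
    return values


def parse_cpu_times(stat_text: str) -> tuple:
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            ticks = [int(v) for v in fields[1:]]
            idle = sum(ticks[3:5])   # idle + iowait
            total = sum(ticks[:8])   # guest time is already counted in user time
            return idle, total
    raise ValueError("no aggregate 'cpu' line found")


def cpu_headroom_percent(before: tuple, after: tuple) -> float:
    """Percentage of CPU time that was idle between two (idle, total) samples."""
    idle_delta = after[0] - before[0]
    total_delta = after[1] - before[1]
    if total_delta <= 0:
        raise ValueError("samples must be taken at different times")
    return 100.0 * idle_delta / total_delta


def read_text(path: str) -> str:
    with open(path) as handle:
        return handle.read()


if __name__ == "__main__":
    before = parse_cpu_times(read_text("/proc/stat"))
    time.sleep(1.0)  # assumed sampling interval; longer runs smooth out bursts
    after = parse_cpu_times(read_text("/proc/stat"))

    mem = parse_meminfo(read_text("/proc/meminfo"))
    # MemAvailable is missing on older kernels, so fall back to MemFree.
    available_kb = mem.get("MemAvailable", mem.get("MemFree", 0))
    disk = shutil.disk_usage("/")  # assumed mount point

    print(f"CPU headroom:     {cpu_headroom_percent(before, after):.1f}%")
    print(f"Memory headroom:  {available_kb / 1024:.1f} MiB")
    print(f"Storage headroom: {disk.free / (1024 * 1024):.1f} MiB")
```

Running it while the SampleApp handles a voice interaction or streams media gives a point-in-time reading. Like top, the script itself consumes some CPU and memory, so sampling repeatedly over a full use case gives a more representative picture than a single run.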

Sizing It All Up

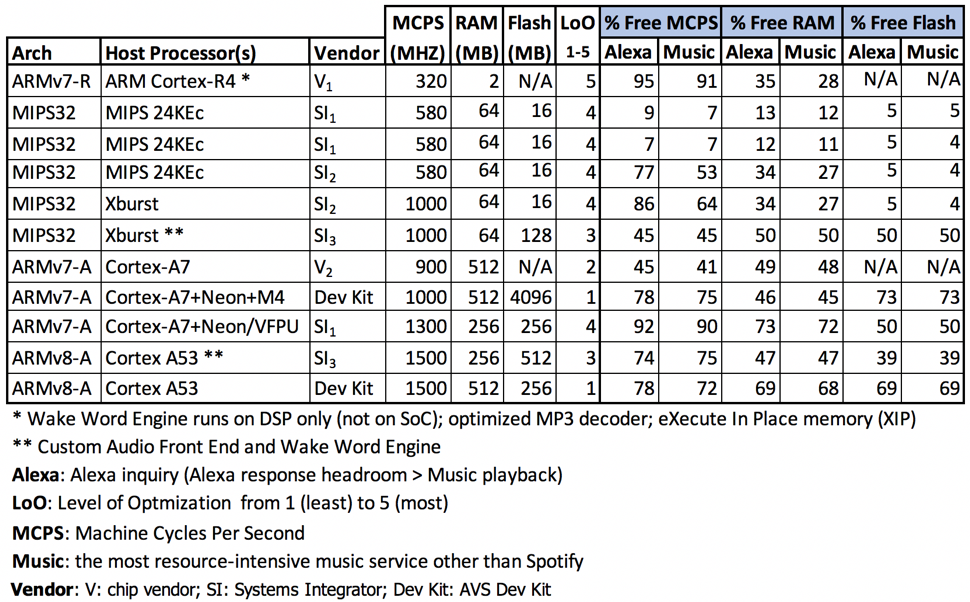

The amount of CPU, memory, and storage can vary substantially for different processor architectures and operating systems. Optimization techniques play a huge role in reducing required system resource capacity. Table 1 below shows examples of processor, memory, and flash storage headroom values in Alexa applications for Alexa conversation (voice responses, Flash Briefings, weather) and streaming media use cases. The vendor column indicates whether the device originates with a processor vendor (V), AVS Systems Integrator (SI), or an AVS Development Kit (Dev Kit).

Table 1 - Headroom on Alexa Built-in Devices

Each solution has a different degree of optimization, as shown in the Level of Optimization (LoO) column. For illustration purposes, the table also includes a highly optimized solution on a considerably less powerful ARM Cortex-R4 microcontroller, in which the Alexa wake word engine runs exclusively on the device’s digital signal processor front-end to offload the host processor.

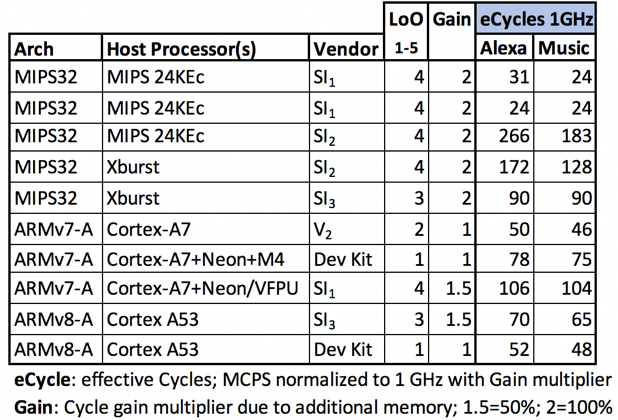

Table 2 and Figure 1 below illustrate how effectively the various solutions utilize the host processor and memory.

Table 2 - Normalized Headroom on Alexa Built-in Devices

The data for each of the processors were normalized to a common clock frequency of 1 GHz (1,000 MCPS, or million cycles per second) and 512 MB of RAM for comparison. A gain multiplier was introduced to factor in a hypothetical gain that could be achieved by exchanging machine cycles for larger RAM utilization. The low-end ARM Cortex-R4 was left out to limit the comparison to solutions that implement the Alexa wake word on the host processor.

Table 3 - Effective Cycle Usage of Alexa Built-in Devices

The data demonstrate that solutions provided by AVS Systems Integrators generally make the most effective use of system resources on the device, thereby enabling a lower-cost solution and lowering mass production costs. Note that the data may vary over time and are only a snapshot of present Systems Integrator performance. Working with Systems Integrators also provides the benefits of acoustic expertise, Audio Front-End (AFE), pre-validation and manufacturing testing support, and OTA updates for new features and security, as well as the peace of mind of knowing you’ll have a device that passes the high-quality bar.

If you plan on rolling out your own device with Alexa built-in and have the needed in-house expertise and resources, please review the provided LoO data to determine the solution architecture that best fits your product’s needs and the capabilities of your developer resources. Be sure to leave a margin of 20% or more in available CPU after accounting for the Alexa client and your product’s software, so that you can incorporate future Alexa features and functionality. For Cortex-A7 and more powerful processors, be sure to use at least 512 MB of flash for more robust Over-The-Air (OTA) updates, as the extra storage allows for an active and an inactive set of partitions with safe fallback in the event of an incomplete update.
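The two rules of thumb above can be expressed as simple checks. The sketch below is illustrative only: the function names are hypothetical, the CPU budget is expressed in MCPS purely as an example unit, and the flash layout (two copies of the system image plus one shared data area) is an assumed simplification of an active and inactive partition scheme, not a layout prescribed by AVS.

```python
def cpu_margin_fraction(capacity_mcps: float,
                        alexa_client_mcps: float,
                        product_mcps: float) -> float:
    """Fraction of CPU capacity left after the Alexa client and product software."""
    used = alexa_client_mcps + product_mcps
    return (capacity_mcps - used) / capacity_mcps


def meets_cpu_margin(capacity_mcps: float,
                     alexa_client_mcps: float,
                     product_mcps: float,
                     required_margin: float = 0.20) -> bool:
    """True when the remaining CPU fraction is at least the required margin."""
    margin = cpu_margin_fraction(capacity_mcps, alexa_client_mcps, product_mcps)
    return margin >= required_margin


def fits_ab_layout(flash_mb: float, image_mb: float, shared_data_mb: float) -> bool:
    """True when flash holds an active and an inactive image plus shared data.

    Assumed layout: 2 x system image + 1 x shared data area.
    """
    return 2 * image_mb + shared_data_mb <= flash_mb


if __name__ == "__main__":
    # Hypothetical budget: 1000 MCPS available, 500 for the Alexa client,
    # 250 for the product's own software.
    print(cpu_margin_fraction(1000, 500, 250))    # remaining fraction of CPU
    print(meets_cpu_margin(1000, 500, 250))       # compared against 20%
    print(fits_ab_layout(512, image_mb=200, shared_data_mb=64))
```

Measured figures from your own build, taken under the heaviest expected use case, would replace the hypothetical inputs here.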

Cost and the need for low-power voice-forward consumer devices are the driving forces behind using lower-powered processors and less memory. However, these must be balanced against time-to-market and the ability to support new Alexa features, especially for the first release of a novel product. Users expect feature parity across voice-forward devices. The good news is that working with Systems Integrators can get your product to market faster and lower the overall cost with a more effective solution.

New to AVS?

AVS makes it easy to integrate Alexa directly into your products and bring voice-forward experiences to customers. Through AVS, you can add a new natural user interface to your products and offer your customers access to a growing number of Alexa features, smart home integrations, and skills. Get started with AVS.