Editor's Note: For an updated version of the Smart Home Skill API Validation Package, please click here.

When building skills with the Smart Home Skill API, you may have found it challenging to test and validate your skill's Lambda responses. Unlike custom skills, which you can test with the Service Simulator in the developer console, skills built for the Smart Home Skill API can only be tested with a live Alexa-enabled device, such as an Echo, or with the online EchoSim simulator. And if your response does not conform to the Smart Home Skill API reference, you only hear a generic error response from Alexa.

Not only did this make testing smart home skills difficult, it also contributed to runtime failures that were invisible to skill developers. To help ease these pain points, we’ve built the Alexa Smart Home Skill API Validation Package, available now to all smart home skill developers.

Note that this blog post assumes that you are familiar with developing skills based on the Smart Home Skill API, and that ideally, you have a smart home skill published. If not, please visit the following guides to learn more about smart home skills before proceeding.

- Understanding the Smart Home Skill API

- Steps to create a smart home skill

- Smart Home Skill API reference

How to Get Started

If you’d like to dive right in, feel free to visit our open-source GitHub repo to get started.

The README provides instructions on how to use the validation package, so please read it carefully. Then clone the repo and add the validation code to your Lambda function. The validation package is currently available only for Node.js and Python. You are welcome to contribute to the repo if you use Java or C# and are interested in porting our code to those languages.

Why This Tool Is Valuable

A typical, simplified smart home skill Lambda handler written in Python looks like this:

    def lambda_handler(event, context):
        response = handleEvent(event)
        return response

handleEvent() is another function that does all the work to handle the incoming discovery or control directive from the Alexa Smart Home trigger. It returns a response JSON, which you return from your Lambda function back to Alexa. If your Lambda does not time out, or if you don’t encounter any downstream errors, you would be able to generate the response JSON, return it, and your Lambda function completes successfully.
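As a rough illustration of what handleEvent() might look like, the sketch below dispatches on the namespace in the directive header. The helpers buildResponse(), handleDiscovery(), and handleControl() are hypothetical names, and their placeholder bodies stand in for the calls a real skill would make to its device cloud.

```python
import uuid


def buildResponse(namespace, name, payload):
    # Hypothetical helper: wraps a payload in a v2-style response header.
    return {
        "header": {
            "namespace": namespace,
            "name": name,
            "payloadVersion": "2",
            "messageId": str(uuid.uuid4()),
        },
        "payload": payload,
    }


def handleDiscovery(event):
    # Placeholder: a real skill would look up the customer's devices here.
    return buildResponse(
        "Alexa.ConnectedHome.Discovery",
        "DiscoverAppliancesResponse",
        {"discoveredAppliances": []},
    )


def handleControl(event):
    # Placeholder: only TurnOnRequest is handled in this sketch.
    name = event["header"]["name"]
    if name != "TurnOnRequest":
        raise ValueError("Unsupported control request: " + name)
    return buildResponse("Alexa.ConnectedHome.Control", "TurnOnConfirmation", {})


def handleEvent(event):
    # Route the directive by its header namespace.
    namespace = event["header"]["namespace"]
    if namespace == "Alexa.ConnectedHome.Discovery":
        return handleDiscovery(event)
    if namespace == "Alexa.ConnectedHome.Control":
        return handleControl(event)
    raise ValueError("Unsupported namespace: " + namespace)
```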

But what if the response JSON you generated was malformed? Your Lambda function would have still sent it back successfully, but it would have failed validation on the Alexa side, resulting in an error message to the Alexa user. Unfortunately, you would have had no idea that your response JSON resulted in an error, because the error never gets back to you.

We built the validation package to close this gap. In this simple example, you import the validation functions at the top of your Lambda function:

from validation import validateResponse, validateContext

Then, right before you return the response JSON, you validate it within a try/except block:

    def lambda_handler(event, context):
        response = handleEvent(event)
        try:
            validateResponse(response)
        except ValueError as error:
            # log the validation error so it appears in CloudWatch
            print(error)
        return response

This lets you see in your logs the validation error that would have been caught on the Alexa side. Although you still return malformed JSON to Alexa, at least you now know that something is wrong and have the data to diagnose and fix it.

Please see the README in the repo for the Node.js example, as well as details on validateContext().

Now that you have a high-level understanding of what we’re trying to do, here are a few real examples where the validation package would’ve proved useful.

Malformed Error Message

The UnwillingToSetValueError has the following structure:

    {
      "header": {
        "namespace": "Alexa.ConnectedHome.Control",
        "name": "UnwillingToSetValueError",
        "payloadVersion": "2",
        "messageId": "917314cd-ca00-49ca-b75e-d6f65ac43503"
      },
      "payload": {
        "errorInfo": {
          "code": "ThermostatIsOff",
          "description": "Thermostat is off."
        }
      }
    }

However, we’ve seen a few cases where developers forget the “errorInfo” property, and instead, write the payload as:

    "payload": {
      "code": "ThermostatIsOff",
      "description": "Thermostat is off."
    }

At first glance, the JSON does not appear to be malformed. And since this is an error message, the expected response text-to-speech (TTS) from Alexa would be that of an error. Unfortunately, this message would fail validation on the Alexa side, and the response TTS would be a similar error response. As such, this is a mistake that is easy to make and hard to debug. With the validation package, this would have been quickly caught in testing, and would never have made it to production.
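One way to make this mistake harder to repeat is to build the error message in a single helper instead of writing the payload by hand at each call site. The sketch below assumes a hypothetical helper named buildUnwillingToSetValueError(); it is not part of the validation package.

```python
import uuid


def buildUnwillingToSetValueError(code, description):
    # Hypothetical helper: always nests code and description under
    # payload.errorInfo, matching the structure shown above.
    return {
        "header": {
            "namespace": "Alexa.ConnectedHome.Control",
            "name": "UnwillingToSetValueError",
            "payloadVersion": "2",
            "messageId": str(uuid.uuid4()),
        },
        "payload": {
            "errorInfo": {
                "code": code,
                "description": description,
            }
        },
    }


error_response = buildUnwillingToSetValueError("ThermostatIsOff", "Thermostat is off.")
```

Because every call site goes through the same function, the errorInfo wrapper cannot be left out in one place and included in another.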

Runtime Errors with Discovery Responses

Another common error we notice is a DiscoverAppliancesResponse that fails validation on the Alexa side. As you know, the DiscoverAppliancesResponse must include a list of discoveredAppliances, and each discoveredAppliance has a number of required fields, such as modelName or friendlyDescription.

A skill may work perfectly with an existing set of devices, but in the future when a new device is introduced to customers, one of the fields may be empty for that device. For example, the modelName may be missing because of some bug from a dependent service. When that happens at runtime, you won’t notice anything, but the DiscoverAppliancesResponse will fail validation on the Alexa side and result in a broken customer experience. With the validator package, you’d see a spike in errors from your Lambda, and would know exactly what type of device was causing the problem.

Operational Excellence

At Amazon, operational excellence is one of our top priorities, and this is especially important with smart home services. Customers let us know loud and clear, through skill reviews and social media, when smart home skills fail. The Works with Amazon Alexa (WWAA) program also has operational excellence as a core requirement, and skills and devices with poor quality of service are at risk of losing their WWAA badge and certification. Therefore, it is in both our interests to maintain a high standard of operational excellence. The use of this validator package will aid in that effort, and will provide you with information that can help you find and resolve errors quickly before they affect customers.

How to Use the Validation Package

Setup

Please follow the instructions in the README file in the validation package repository to clone the repo, add the validation package file to your Lambda code, and add a few lines to your main Lambda handler. Your Lambda may be structured differently; for example, you might return responses to Alexa from multiple points in your handlers. In that case, add a call to validateResponse() wherever you respond to Alexa so that every response is validated.
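If your handler has several return points, one option is to wrap it in a decorator so that every response passes through the same validation call. The sketch below is only an illustration: the decorator name validated is hypothetical, and the validateResponse() defined here is a stand-in so the example runs on its own. In a real Lambda you would import validateResponse from the validation package instead.

```python
import functools
import logging

logger = logging.getLogger(__name__)


def validateResponse(response):
    # Stand-in for the package's validateResponse(); it checks far less.
    if "header" not in response:
        raise ValueError("response.header is missing: %s" % response)


def validated(handler):
    # Hypothetical decorator: validates whatever the handler returns,
    # logs any validation error, and still returns the response.
    @functools.wraps(handler)
    def wrapper(event, context):
        response = handler(event, context)
        try:
            validateResponse(response)
        except ValueError as error:
            logger.error(error)
        return response
    return wrapper


@validated
def lambda_handler(event, context):
    # Two return points; both are validated by the decorator.
    if event.get("simulateBug"):
        return {"payload": {}}
    return {"header": {}, "payload": {}}
```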

The validation package code is also fairly straightforward. It’s basically a series of if/then statements that check your response JSON against the Smart Home Skill API specs.
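To give a feel for that style of check, here is a minimal sketch of an if/then validation for the error message discussed earlier. The function name checkErrorPayload() is hypothetical and the logic is an illustration, not the package's actual source.

```python
def checkErrorPayload(response):
    # Illustrative check only: verifies that an UnwillingToSetValueError
    # carries payload.errorInfo with both code and description.
    name = response["header"]["name"]
    payload = response["payload"]
    if name == "UnwillingToSetValueError":
        if "errorInfo" not in payload:
            raise ValueError(
                "%s :: payload.errorInfo is missing: %s" % (name, payload)
            )
        for key in ("code", "description"):
            if key not in payload["errorInfo"]:
                raise ValueError(
                    "%s :: payload.errorInfo.%s is missing: %s" % (name, key, payload)
                )
```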

At a high level, the validation package provides two validation functions: validateContext() and validateResponse().

validateContext() Example

validateContext() is used only to check whether your Lambda function timeout is set to 7 seconds or less. This ensures that your Lambda times out and reports an error before Alexa times out (8 seconds), so that you can see the timeout error. Otherwise, you could take more than 8 seconds to respond, and even though you’ll think you have responded properly and without error, Alexa will actually time out, resulting in an error to the user.

Note, however, that for skills that have implemented the lock APIs, you should set this check to 60 seconds or more, or choose to not include this validation. This is because lock responses may take up to 60 seconds.
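A check like this could be sketched with the Lambda context object's get_remaining_time_in_millis() method, which at the very start of an invocation is close to the configured timeout. This is an assumption about one possible approach, not the package's actual code; checkTimeout() and FakeContext are hypothetical names, and FakeContext exists only so the example runs outside Lambda.

```python
def checkTimeout(context, max_seconds=7):
    # Call this first in the handler, before any work is done, so the
    # remaining time approximates the configured timeout.
    remaining_ms = context.get_remaining_time_in_millis()
    if remaining_ms > max_seconds * 1000:
        raise ValueError(
            "Lambda :: timeout must be %d seconds or less: %s" % (max_seconds, context)
        )


class FakeContext:
    # Test double for the Lambda context object (hypothetical).
    def __init__(self, timeout_seconds):
        self._remaining_ms = timeout_seconds * 1000

    def get_remaining_time_in_millis(self):
        return self._remaining_ms
```

A skill that implements the lock APIs could call checkTimeout(context, max_seconds=60) instead, in line with the note above.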

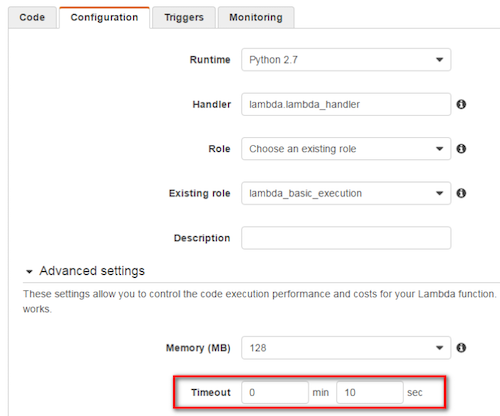

Your Lambda function timeout setting can be found on the AWS Console > Lambda > your Lambda function > Configuration tab > Advanced Settings:

In this example, the Lambda timeout is set to 10 seconds.

If your Lambda handler includes a call to validateContext(), then the next time your Lambda receives any request from Alexa, you will see something like the following exception in your CloudWatch logs:

[ERROR] 2017-04-19T07:56:28.955Z b1d54ede-24d5-11e7-a518-d741d3d846ef Lambda :: timeout must be 7 seconds or less: <__main__.LambdaContext object at 0x7f7754f63e10>

With this information, you can adjust your Lambda function timeout accordingly via the AWS Console (pictured above), or the AWS CLI, e.g.:

$ aws lambda update-function-configuration --function-name <your-function-name> --timeout 7

validateResponse() Examples

The bulk of the validator package focuses on validating your responses including discovery, control, query, and error messages. To illustrate this, let’s use the two real examples discussed earlier in this post as well as another example for Control messages. We’ll demonstrate what you should see in your logs after you’ve implemented the validation package.

Malformed Error Message

Suppose you made the mistake of forgetting the “errorInfo” structure in your UnwillingToSetValueError message, and your response JSON is like this:

    {
      "header": {
        "namespace": "Alexa.ConnectedHome.Control",
        "name": "UnwillingToSetValueError",
        "payloadVersion": "2",
        "messageId": "917314cd-ca00-49ca-b75e-d6f65ac43503"
      },
      "payload": {
        "code": "ThermostatIsOff",
        "description": "Thermostat is off."
      }
    }

When validateResponse() runs, you should see the following exception in your CloudWatch logs:

ValueError: UnwillingToSetValueError :: payload.errorInfo is missing: {'code': 'ThermostatIsOff', 'description': 'Thermostat is off.'}

This exception clearly tells you that payload.errorInfo is missing, then shows you exactly what was in payload by printing out the payload JSON object in the exception message.

Runtime Errors with Discovery Responses

Suppose one of your devices suddenly started reporting an empty modelName. As such, one of the discoveredAppliances in your DiscoverAppliancesResponse may look like this:

    {
        'applianceId': 'Switch-001',
        'manufacturerName': SAMPLE_MANUFACTURER,
        'modelName': '',
        'version': '1',
        'friendlyName': 'Switch',
        'friendlyDescription': 'On/off switch that is functional and reachable',
        'isReachable': True,
        'actions': [
            'turnOn',
            'turnOff',
        ],
        'additionalApplianceDetails': {}
    },

Notice that modelName is an empty string. Running this response through validateResponse() results in the following exception in your CloudWatch logs:

DiscoverAppliancesResponse :: modelName must not be empty: {'friendlyDescription': 'On/off switch that is functional and reachable', 'modelName': '', 'additionalApplianceDetails': {}, 'version': '1', 'manufacturerName': 'Sample Manufacturer', 'friendlyName': 'Switch', 'actions': ['turnOn', 'turnOff'], 'applianceId': 'Switch-001', 'isReachable': True}

As you can see, the exception tells you that modelName must not be empty, and also shows you which discoveredAppliance is missing the modelName. You can use that information to further troubleshoot and find the root cause.
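A check of this kind can be sketched as a loop over the discovered appliances that flags any empty string field. The field list below is an assumption taken from the example appliance in this post, and checkDiscoveredAppliances() is a hypothetical name; the Smart Home Skill API reference remains the authority on which fields are required.

```python
# Assumed list of string fields that must be non-empty (illustrative only).
REQUIRED_STRING_FIELDS = [
    "applianceId",
    "manufacturerName",
    "modelName",
    "version",
    "friendlyName",
    "friendlyDescription",
]


def checkDiscoveredAppliances(response):
    # Raise on the first appliance that has a missing or empty field,
    # and include the whole appliance so the device can be identified.
    for appliance in response["payload"]["discoveredAppliances"]:
        for field in REQUIRED_STRING_FIELDS:
            if not appliance.get(field):
                raise ValueError(
                    "DiscoverAppliancesResponse :: %s must not be empty: %s"
                    % (field, appliance)
                )
```

Including the full appliance in the message is what makes it possible to tell which device type triggered the failure.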

Incorrect Value in GetTargetTemperatureResponse

Suppose you were in a hurry when coding the GetTargetTemperatureResponse for the Lambda that handles thermostat query requests, and you accidentally wrapped the variable current_mode in quotes. Instead of resolving to something like ‘AUTO’ or ‘HEAT’, depending on the current mode of the thermostat, the value is now a literal string:

    payload = {
        'applianceResponseTimestamp': getUTCTimestamp(),
        'temperatureMode': {
            'value': 'current_mode',
            'friendlyName': '',
        }
    }

When validateResponse() runs, you’ll see this error in your CloudWatch logs:

GetTargetTemperatureResponse :: payload.temperatureMode.value is invalid: {'coolingTargetTemperature': {'value': 23.0}, 'heatingTargetTemperature': {'value': 19.0}, 'temperatureMode': {'friendlyName': '', 'value': 'current_mode'}, 'applianceResponseTimestamp': '2017-04-24T04:09:46Z'}: ValueError

This exception tells you that payload.temperatureMode.value is invalid, and you can see that value = ‘current_mode’, which is not what you are expecting.
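The fix is to pass the variable itself rather than a quoted string. The sketch below also guards the value against a set of allowed modes before building the payload. Both the helper name buildTemperatureMode() and the contents of VALID_MODES are assumptions for illustration; check the Smart Home Skill API reference for the modes your response type accepts.

```python
# Assumed set of thermostat modes (illustrative; see the API reference).
VALID_MODES = {"AUTO", "COOL", "HEAT", "ECO", "OFF", "CUSTOM"}


def buildTemperatureMode(current_mode):
    # Hypothetical helper: rejects values such as the literal string
    # 'current_mode' before they reach the response.
    if current_mode not in VALID_MODES:
        raise ValueError(
            "payload.temperatureMode.value is invalid: %r" % (current_mode,)
        )
    return {
        "value": current_mode,  # the variable, not the string 'current_mode'
        "friendlyName": "",
    }
```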

Monitoring CloudWatch

Once you’ve added the validation package to your Lambda handler, we recommend you set up CloudWatch alarms to monitor your logs and metrics. The validation package raises exceptions; if you let them propagate out of your handler (for example, by re-raising after logging), they count toward the Errors metric for Lambda in CloudWatch.

You can create alarms based on the Errors metric so that if the validation package starts catching runtime errors and exceeds the alarm thresholds you’ve set, then you get notified. To begin, we suggest that you set the threshold very low so you can catch more errors. If you find that you are receiving many notifications, then it’s an indication that your skill is impacting your customers significantly, and you should debug immediately.
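As one possible starting point, the sketch below builds the parameters for a low-threshold alarm on the Lambda Errors metric and passes them to the boto3 CloudWatch client's put_metric_alarm() call. The function names, the alarm name suffix, and the one-minute period are assumptions for illustration. Keep in mind that Lambda counts an invocation toward Errors only when it ends in an unhandled exception, so this assumes validation errors are re-raised rather than only logged.

```python
def buildErrorsAlarm(function_name, sns_topic_arn, threshold=1):
    # Parameters for CloudWatch put_metric_alarm(): alarm when the function
    # reports at least `threshold` errors within a one-minute period.
    return {
        "AlarmName": function_name + "-validation-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def createErrorsAlarm(function_name, sns_topic_arn):
    # boto3 is imported here so the builder above can be used without it.
    import boto3

    cloudwatch = boto3.client("cloudwatch")
    cloudwatch.put_metric_alarm(**buildErrorsAlarm(function_name, sns_topic_arn))
```

Calling createErrorsAlarm() with your function name and the ARN of an SNS topic you subscribe to would create the alarm; raise the threshold later if it proves too noisy.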

For more information on how to set up alarms for CloudWatch, see our documentation on Creating Amazon CloudWatch alarms.

Send Us Your Questions and Feedback

The Alexa Smart Home Skill API Validation Package can help you raise and maintain your skill quality. This new tool reveals validation errors to you during development, testing, and production runtime that would’ve otherwise only been caught on the Alexa side.

We are committed to keeping the validation package updated every time the Smart Home Skill API is updated, and we hope that you’ll find it useful as you develop your smart home skill.

If you have any questions or feedback, or if you notice errors in the validation package, please let us know in the Issues section of our GitHub repo. And please feel free to help us port the validation package to other Lambda-supported languages such as Java and C#. Please submit pull requests as needed.
