Alexa Blogs

One of the promises of a voice-first user experience is that it’s natural. People can say what they think and the user interface can understand them, parsing their meaning or intent, rather than simply understanding their words. In this case, technology bends to our way of thinking and acting, rather than making us learn how to use it. Alexa handles automatic speech recognition (ASR) and natural language understanding (NLU). (You can read about new research and advancements presented by the Alexa Science team at Interspeech 2018 here).

But creating conversational experiences won’t be solved by Amazon alone. It’s a tough and important challenge and it’ll take the developer community to solve it. Our vision is for Alexa to be able to help you with whatever you need. To make that a reality, we need help from third-party developers who are coming up with engaging voice experiences every day. Alexa skills and the developers that build them are incredibly important to that vision.

A recent discussion with industry vets and voice-first enthusiasts inspired me to write this post on the Alexa technologies that help people find engaging skills. This includes the technologies that support finding, launching, and re-engaging with skills in natural ways and how you can use them to make your skill more discoverable.

Making Alexa More Friction-Free and Skills More Discoverable

You can use any Alexa skill by saying the skill’s invocation name, and then following up with additional utterances. For example, you might say, “Alexa, open Tide Stain Remover” and then ask for advice on an ink stain. Of course, that’s not how people actually talk. We don’t use the same exact phrases day in and day out; we like variety, we forget the words, we explore new things, and we want to skip the steps.

You shouldn’t have to remember the name of a skill or think of the proper phrasing to use it. Instead you might say, “Alexa, remove an ink stain.” You are trying to accomplish two things. First, you want to launch the Tide Stain Remover skill. Second, you want to go straight to the ink stain features of the skill. Really, what you want is for Alexa to immediately walk you through stain mitigation steps (probably urgently; does the 10-second rule stand for stains?). So, for a customer in that moment, saying “Alexa, remove an ink stain” provides a voice shortcut, linking deep into the best skill for the task. It lets you use the skill even if you forgot the name, don’t know that skill exists, or just want to say fewer words.

Finding the Right Skill Easily with Name-Free Interaction

Millions of customers use skills every month without using the invocation name. If a customer requests something broad like, “Alexa, let’s play a game,” Alexa will pick a skill or suggest a few that are well-suited for the request. We test and rotate the suggestions to see which skills customers are responding to and give more developers visibility. When customers use your skill (either through these broad utterances or any other invocation channel), the back end powering this system gathers metrics on the aggregate usage of skills and on the preferences of each individual customer. As customers re-engage with your skill, this will improve the likelihood of it being discovered by others. For a select (and growing) set of utterances, we also provide a rich voice-first conversational discovery experience, which will allow customers to discover your skill based on its capabilities.

Surfacing the Most Relevant Skills via Shortlister and HypRank

It’s important that customers are able to easily re-engage with their skills, even if they describe what they want instead of calling the skill by name. Let’s look again at the phrase “Alexa, remove an ink stain.” Here we have an utterance, “remove a {stain type} stain,” and a slot value, a stain type of “ink,” that can be mapped to a skill’s interaction model. To make this work, two techniques are employed to deliver the right experience. The first is a neural model architecture we call Shortlister, which solves a domain classification problem to find the most statistically significant (k-best) matches of candidate skills (details in a recent blog and research paper). The goal of Shortlister is to efficiently identify as many relevant skills as possible; it’s high volume. The second is a hypothesis reranking network we call HypRank. It uses contextual signals to select the most relevant skills; it’s high precision (details in a recent blog and research paper). When combined, these two help determine which skill is most likely to provide the correct response to an invocation name-free utterance. Beyond helping people re-engage with the skills they’ve manually or automatically enabled, Shortlister and HypRank surface specific and relevant skills to people who have never used them.
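To make the two-stage idea concrete, here is a toy sketch of a shortlist-then-rerank pipeline. It is not Amazon's Shortlister or HypRank, which are neural models described in the linked posts; it swaps in simple word overlap for stage one and a hand-weighted score for stage two. The `Skill` class, the `engagement` field, the weights, and the sample skills are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    name: str
    sample_utterances: list[str]
    engagement: float  # hypothetical contextual signal in [0, 1]


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two strings."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if (ta | tb) else 0.0


def shortlist(utterance: str, skills: list[Skill], k: int = 3):
    """Stage 1 (recall): keep the k skills whose sample utterances match best."""
    scored = []
    for skill in skills:
        best = max(
            (token_overlap(utterance, u) for u in skill.sample_utterances),
            default=0.0,
        )
        if best > 0:
            scored.append((best, skill))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def rerank(candidates, enabled: set[str]) -> list[Skill]:
    """Stage 2 (precision): re-score the shortlist using contextual signals."""
    def final_score(pair):
        match, skill = pair
        bonus = 0.2 if skill.name in enabled else 0.0  # assumed weight
        return match + 0.5 * skill.engagement + bonus  # assumed weights
    ordered = sorted(candidates, key=final_score, reverse=True)
    return [skill for _, skill in ordered]


skills = [
    Skill("Stain Helper", ["remove a wine stain", "remove an ink stain"], 0.9),
    Skill("Ink Facts", ["tell me an ink fact"], 0.2),
    Skill("Trivia Night", ["play a trivia game"], 0.95),
]
candidates = shortlist("remove an ink stain", skills, k=2)
best = rerank(candidates, enabled=set())[0]
```

The split mirrors the division of labor described above: the first stage is cheap and casts a wide net, and the second stage spends more effort on a small candidate set.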

Encouraging Skill Use with Voice-First Recommendations

You may have noticed that on detail pages for products on Amazon.com, we include a widget titled “Customers who bought this item also bought” that provides recommendations for similar products. In the Alexa App and the Alexa Skills Store, we do the same for skills. Likewise, Alexa does this via voice. When customers have finished engaging with a skill, they may receive a recommendation from Alexa for other skills they may enjoy. For example, Alexa might say, "Because you used <skill1 name>, Amazon recommends <skill2 name>. Would you like to try it?" If they say yes, they can try it out. To optimize the experience for customers and to expose a wide range of skills, we experiment with which skills to recommend and how frequently. So far, we’re seeing that a lot of people are getting to experience new skills this way and this is improving discovery for skills overall.

Removing Enablement Friction

Enabling a skill is similar to downloading an app. Customers can enable skills in the Alexa App, from amazon.com/skills, or simply via voice by saying, “Alexa, enable [the skill].” Because skills live in the cloud, rather than being downloaded to a device, we find that customers want simple, frictionless access. We now automatically enable a list of thousands of the most popular skills, and we’re constantly adding more. Auto-enablement means that you can use a skill immediately when you say, “Alexa, open [the skill].” The criteria we use to choose auto-enabled skills include signals of customer satisfaction such as number of customers, customer engagement, and ratings. When a customer invokes one of these skills, whether through direct or name-free interaction, they can immediately engage with the skill. For other skills, including those that have a mature rating or that require extra steps like signing in through account linking or parental permission, customers will be directed to use alexa.amazon.com or the Alexa App to choose and configure skills to enable before launching.

Optimizing Your Skill for Better Discovery

You might wonder how you can optimize your skill experience for name-free interaction. The machine-learning model takes a lot of factors into account, including the quality and usage of your skill. So start by making sure you deliver a lot of value to customers. Some of the ways that we identify high-quality skills are the number of customers, customer engagement, and ratings and reviews. Beyond ensuring you have an engaging skill, there are things you can do to give the system explicit signals, like adding accurate descriptions and keywords in the skill metadata, selecting the correct category, and implementing CanFulfillIntentRequest.

Implement CanFulfillIntentRequest (Beta)

Alexa is getting smarter every day and the Alexa skills you create play directly into that, providing a broad range of information, experiences, and games that can meet customer requests. We aim to seamlessly route customers to the best response, which is often a skill. Using the CanFulfillIntentRequest beta, your skill reports at runtime whether it can fulfill a given customer request. It responds with a few explicit signals: whether it can fulfill the intent, whether it can understand the slot(s), and whether it can fulfill the slot(s). Alexa combines this information with a machine-learning model to choose the right skill to use when a customer makes a request without an invocation name. As a result, customers find the right skill faster, using the search terms they say most naturally.

Boost your skill’s signal to our various systems by implementing the CanFulfillIntentRequest interface in your skill. To enable this, open your skill in the developer console. Go to the Build > Custom > Interfaces page, and enable the CanFulfillIntentRequest interface.

Then in your code, implement your response. See the quick-start guide and docs to get started. For HypRank to choose the best response for the customer, it’s important to be accurate with your CanFulfillIntentRequest responses. A skill’s response should be closely aligned with what the skill can really accomplish. Appropriately saying “Maybe” or “No” is a stronger signal for HypRank than saying “Yes” and failing to deliver. A stronger signal increases the likelihood that your skill is matched to fulfill a customer’s request. To this end, the key concept that you’ll need to think through is how to respond at the intent level and for each of the slots.
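As a rough illustration of answering honestly at both levels, the sketch below builds a CanFulfillIntentRequest response as a plain Python dictionary, without any SDK. The response field names (`canFulfillIntent`, `canFulfill`, `canUnderstand`) follow the beta documentation as commonly described and should be checked against the current docs. The intent name `RemoveStainIntent`, the slot name `stainType`, the stain list, and the rule for choosing “YES”, “MAYBE”, or “NO” are all hypothetical choices for an example stain-removal skill.

```python
# Hypothetical catalog of what this example skill can really do.
SUPPORTED_SLOTS = {"RemoveStainIntent": {"stainType"}}
KNOWN_STAINS = {"ink", "wine", "grass", "coffee"}


def slot_answer(intent_name: str, slot_name: str, value: str) -> dict:
    """Answer for one slot: do we recognize it, and can we act on its value?"""
    known_slot = slot_name in SUPPORTED_SLOTS.get(intent_name, set())
    known_value = known_slot and value.lower() in KNOWN_STAINS
    if known_value:
        return {"canUnderstand": "YES", "canFulfill": "YES"}
    # A known slot with an unfamiliar value is only a partial match.
    return {"canUnderstand": "MAYBE" if known_slot else "NO", "canFulfill": "NO"}


def build_can_fulfill_response(request: dict) -> dict:
    """Build the response body for a CanFulfillIntentRequest payload."""
    intent = request.get("intent", {})
    name = intent.get("name", "")
    slots = {}
    overall = "NO"  # default: do not claim what the skill cannot deliver
    if name in SUPPORTED_SLOTS:
        for slot_name, slot in (intent.get("slots") or {}).items():
            slots[slot_name] = slot_answer(name, slot_name, slot.get("value") or "")
        if all(s["canFulfill"] == "YES" for s in slots.values()):
            overall = "YES"
        elif any(s["canUnderstand"] != "NO" for s in slots.values()):
            overall = "MAYBE"
    return {
        "version": "1.0",
        "response": {"canFulfillIntent": {"canFulfill": overall, "slots": slots}},
    }
```

For “remove an ink stain” this sketch answers “YES” at both levels. For a stain type the skill has never heard of, it answers “MAYBE” at the intent level and “NO” for fulfilling the slot rather than overpromising.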

Add Accurate Skill Keywords and Categories

When customers make a request, the keywords and categories that you’ve included with your skill submission help us surface appropriate skills. For example, a customer could ask for specific kinds of games: "Alexa, do you have any party games? Kids games? Sports games? {keyword} games?" To select from the thousands of games available today, Alexa makes use of the metadata for relevant skills in the games category with that keyword. We use customer engagement to prioritize the skills for these experiences. We measure how customers are using skills and, depending on the customer’s request, provide skill lists by popularity, recency, and other measures.
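The keyword browse flow can be pictured as a filter over skill metadata followed by a sort on engagement. The sketch below is only an illustration of that idea, not how Alexa implements it; the `SkillListing` class, its `engagement` score, and the sample listings are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SkillListing:
    name: str
    category: str
    keywords: set[str] = field(default_factory=set)
    engagement: float = 0.0  # hypothetical popularity score


def browse(listings: list[SkillListing], category: str, keyword: str) -> list[SkillListing]:
    """Return skills in the category that carry the keyword, most engaging first."""
    wanted = keyword.lower()
    matches = [
        s for s in listings
        if s.category == category and wanted in {k.lower() for k in s.keywords}
    ]
    return sorted(matches, key=lambda s: s.engagement, reverse=True)


listings = [
    SkillListing("Charades Live", "Games", {"party", "family"}, 0.7),
    SkillListing("Quiz Bowl", "Games", {"trivia", "Party"}, 0.9),
    SkillListing("Party Planner", "Lifestyle", {"party"}, 0.8),
    SkillListing("Goal Kick", "Games", {"sports"}, 0.6),
]
party_games = [s.name for s in browse(listings, "Games", "party")]
```

A skill with a missing or inaccurate keyword never reaches the sorting step in this sketch, which is one way to see why the metadata matters.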

Assign keywords and the proper category to your skill so that Alexa can offer your skill for broad or topical utterances.

As customers discover and use your skills through keyword browse experiences, Alexa tracks acceptance/rejection. We use this alongside other data to optimize skill discovery experiences. Ensure that your keywords and categories are chosen carefully. This is critical to connecting customers with the right skill for their request.

Improve Skill Descriptions and Other Metadata

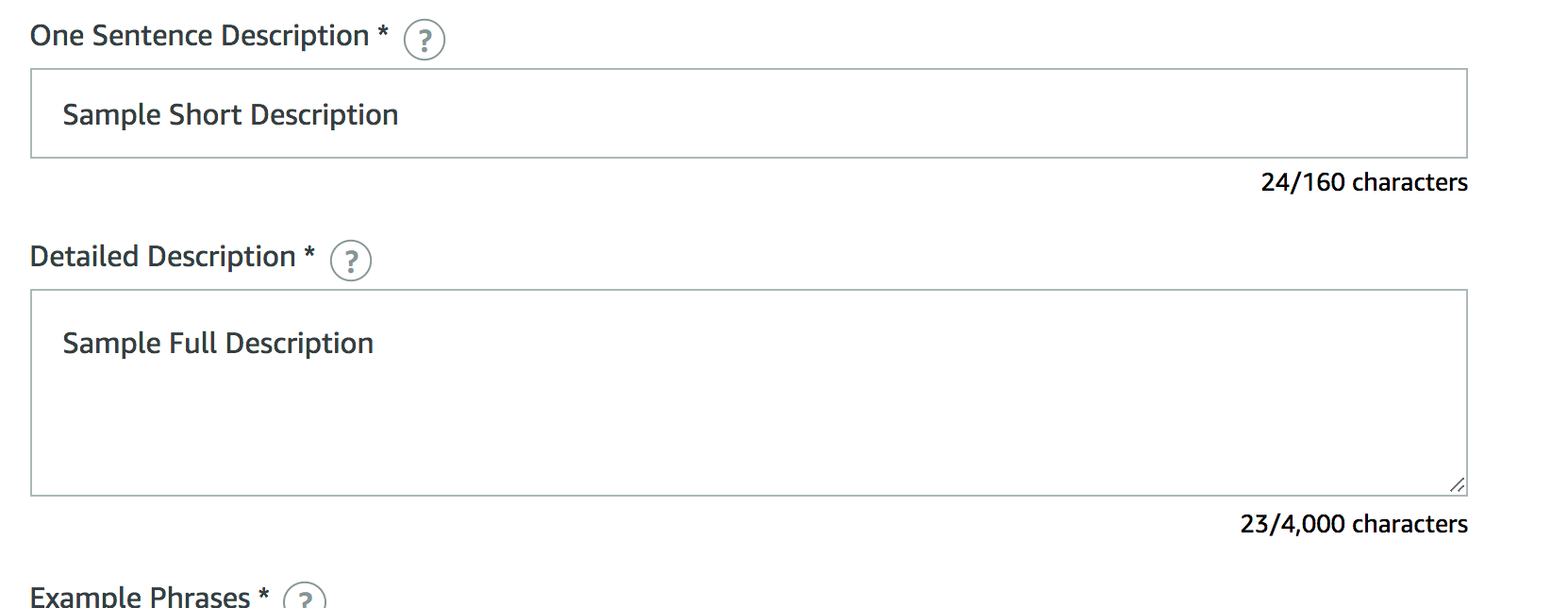

In addition to keywords and categories, we scan description fields to surface the skills that customers are asking for. Make sure that your description (and particularly your detailed description) includes the various things your skill can do. For the one-sentence description, imagine that the text is being read aloud. It’s a best practice to read it out loud to a friend and make sure it sounds right. We use these descriptions in graphical UIs and when Alexa offers skill suggestions and customers want to learn more. A strong one-sentence description will improve the conversion rate for customers offered your skill.

Use both of your description fields. They serve different purposes.

Optimize for Voice Discovery: Customer Engagement Will Always Be a Core Signal for Our Systems

Let’s recap. To enable people to use your skill in a natural, name-free way, be sure to add descriptive keywords, the proper category, and great descriptions, and implement CanFulfillIntentRequest. The foundation under that, always, is to deliver a high-quality product that meets or exceeds your customers’ expectations. Think deeply about things like voice design, scalability, and beta testing.

When your skills are exposed to customers through any of our name-free interaction systems or considered for promotion by Amazon, you want to be sure to deliver a great first impression. Over time we will continue to improve skill discovery and enhance our machine learning models to create the best customer experience.

Reach out to me on Twitter at @PaulCutsinger to continue the conversation.

Related Content

- Alexa at Interspeech 2018: How Interaction Histories Can Improve Speech Understanding

- The Scalable Neural Architecture behind Alexa’s Ability to Select Skills

- HypRank: How Alexa Determines What Skill Can Best Meet a Customer’s Need

- Implement CanFulfillIntentRequest for Name-free Interaction

- Guide: How to Shift from Screen-First to Voice-First Design

- Video: How Building for Voice Differs from Building for the Screen

- 7 Tips for Building Standout Skills Your Customers Will Love
