Alexa Blogs

Humans like information with high levels of entropy. Communication feels natural and fulfilling when we share more information in the same amount of data. I was reading an article that discussed multi-sensory experiences in human communication, and the article left me with an interesting stat: on average, humans can type 40 words per minute, speak 130 words per minute, and read 250 words per minute. This stat helps explain why consumers have grown to love conversational user interfaces (UIs), and it suggests that pairing visual cues with voice responses makes the interaction feel more natural. If you are working on a voice-forward design today, it’s worth considering how your product will meet this evolutionary step in conversational UIs.

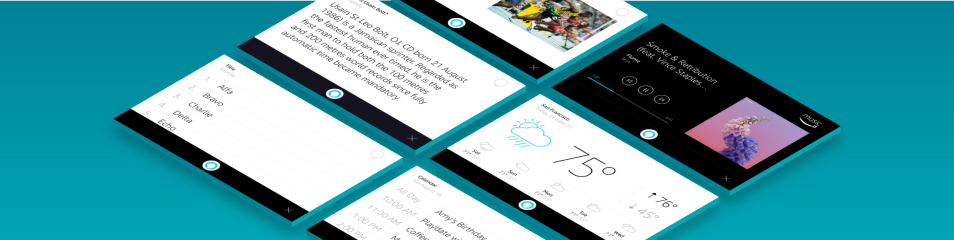

A few weeks ago, we announced Display Cards for AVS, a new capability that allows your Alexa-enabled product to show visuals to complement Alexa voice responses. This capability makes the Alexa experience on screen-based products feel more natural by giving customers multiple ways to consume information that either answers their questions or fulfills their needs.

As a developer, you may be interested to know how AVS delivers the capability in the background. I recommend starting with the updated Java sample app to prototype with this feature on a Raspberry Pi. Before you begin, log in to the AVS Developer Portal and navigate to your registered product. Within that window, you’ll see the “Device Capabilities” tab. Click on that tab to enable the Display Cards feature for your product.


(Figure 1: Device Capabilities in the Developer Portal)

As you build the prototype, you will notice a new interface in the sample app called TemplateRuntime. This new programming interface delivers the visual metadata (JSON) that augments the Alexa voice response and that your Alexa-enabled product needs in order to render Display Cards.

The TemplateRuntime interface has two main directives, RenderPlayerInfo and RenderTemplate. They instruct the device software running on your Alexa-enabled product to render “Now Playing” information for music and radio (RenderPlayerInfo) and to render templates for weather, To-Do and Shopping Lists, calendar, and Alexa skills (RenderTemplate), using the JSON in the directive's payload.
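To make the split between the two directives concrete, here is a minimal Python sketch of how device software might route them. It assumes each directive arrives as a JSON message whose header carries `namespace` and `name` fields. The handler functions and their return values are hypothetical placeholders, not part of the AVS sample app.

```python
import json


def render_player_info(payload):
    # Hypothetical handler: a real client would draw "Now Playing" UI here.
    return "player-info"


def render_template(payload):
    # Hypothetical handler: a real client would pick a layout by payload["type"].
    return "template:" + payload.get("type", "unknown")


# Map (namespace, name) pairs from the directive header to handlers.
HANDLERS = {
    ("TemplateRuntime", "RenderPlayerInfo"): render_player_info,
    ("TemplateRuntime", "RenderTemplate"): render_template,
}


def dispatch(raw_message):
    """Parse one directive message and route it; return None if unhandled."""
    directive = json.loads(raw_message)["directive"]
    header = directive["header"]
    handler = HANDLERS.get((header["namespace"], header["name"]))
    if handler is None:
        return None
    return handler(directive.get("payload", {}))
```

Keeping the routing in a lookup table means a new directive only needs one more entry and one more handler.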

Let's look at an example. When you ask, “Alexa, who is Usain Bolt?”, your Alexa-enabled product uses the SpeechRecognizer interface to send the voice request to AVS, which processes the request and then sends a Speak directive (part of the SpeechSynthesizer interface) that contains the Alexa text-to-speech (TTS) answer. In addition to this directive, AVS also sends JSON through the RenderTemplate directive, providing the visual metadata to render on the screen while the voice response plays back to the user. In this case, the message in the directive will look like:

    {
        "directive": {
            "header": {
                "namespace": "TemplateRuntime",
                "name": "RenderTemplate",
                "messageId": "{{STRING}}",
                "dialogRequestId": "{{STRING}}"
            },
            "payload": {
                "token": "{{STRING}}",
                "type": "BodyTemplate2",
                "title": {
                    "mainTitle": "Who is Usain Bolt?",
                    "subTitle": "Wikipedia"
                },
                "skillIcon": null,
                "textField": "Usain St Leo Bolt, OJ, CD born 21 August 1986..."
            }
        }
    }

If you observe the code carefully, you’ll see “BodyTemplate2” as the Display Card type. AVS offers five different Display Card templates, including Wikipedia entries, Shopping Lists and To-Do Lists, Calendar, Weather, and “Now Playing” information. See our design guidelines for tablets and TVs to learn about these templates. Adapting these render templates for “Night Mode” viewing is a personal favorite.


(Figure 2: Weather Template in Night Mode)

When you are ready to develop your Alexa-enabled product using Display Cards for AVS, see our design resources to learn about common UX considerations. Factors like aspect ratio, average viewing distance, visual complexity of the screen, and use cases play an important role in determining the right templates for your integration. Once the templates are chosen, you must ensure the device software reads the JSON data, parses it appropriately, binds it to the corresponding fields in the correct template, and renders it on screen.
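The read, parse, bind, and render steps can be sketched in Python for the BodyTemplate2 payload shown earlier. This is only an illustration under stated assumptions: the `BodyTemplate2Card` class, the function names, and the plain-text "rendering" are hypothetical stand-ins for whatever UI toolkit your device uses, and only the payload fields that appear in the example above are handled.

```python
import json
import textwrap
from dataclasses import dataclass
from typing import Optional


@dataclass
class BodyTemplate2Card:
    # Hypothetical view model holding the fields a BodyTemplate2 layout shows.
    main_title: str
    sub_title: str
    text: str
    skill_icon: Optional[str] = None


def bind_body_template2(payload: dict) -> BodyTemplate2Card:
    """Bind a RenderTemplate payload to the card fields, tolerating gaps."""
    if payload.get("type") != "BodyTemplate2":
        raise ValueError("unsupported template type: %r" % payload.get("type"))
    title = payload.get("title") or {}
    return BodyTemplate2Card(
        main_title=title.get("mainTitle", ""),
        sub_title=title.get("subTitle", ""),
        text=payload.get("textField", ""),
        skill_icon=payload.get("skillIcon"),  # JSON null becomes None
    )


def render_as_text(card: BodyTemplate2Card, width: int = 40) -> str:
    """Stand-in renderer: lay the card out as wrapped plain text."""
    lines = [card.main_title, card.sub_title, "-" * width]
    lines.append(textwrap.fill(card.text, width=width))
    return "\n".join(lines)


if __name__ == "__main__":
    raw = """{"directive": {"payload": {
        "type": "BodyTemplate2",
        "title": {"mainTitle": "Who is Usain Bolt?", "subTitle": "Wikipedia"},
        "skillIcon": null,
        "textField": "Usain St Leo Bolt, OJ, CD born 21 August 1986..."}}}"""
    payload = json.loads(raw)["directive"]["payload"]
    print(render_as_text(bind_body_template2(payload)))
```

Separating the binding step from the rendering step lets the same parsed card feed different layouts, for example a tablet view and a TV view.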

Start developing multi-modal UX for your Alexa-enabled product using the resources provided on the Display Cards for AVS page on the AVS developer portal. You can also visit us on the AVS Forum or Alexa GitHub to tell us what you are building and speak with one of our experts.

While Display Cards for AVS does not fulfill all the demands of multi-modal UX, it provides a step toward designing for the future. Just as Alexa is always getting smarter, Display Cards for AVS will continue to add new templates and features that your screen-based Alexa-enabled product will benefit from, bringing even more magical voice experiences to customers.