Alexa Blogs

When building voice-first experiences for Echo devices with screens, you have to consider your graphical user interface (GUI) in addition to your voice user interface (VUI) during the voice design process. To help you get started, we developed a new skill template called Berry Bash for quiz and dictionary skills for Echo Show and Echo Spot. In this post, we’ll walk you through the skill template. You can also check out the skill template in the Alexa repository on GitHub to start building.

Getting to Know the Berry Bash Skill Template

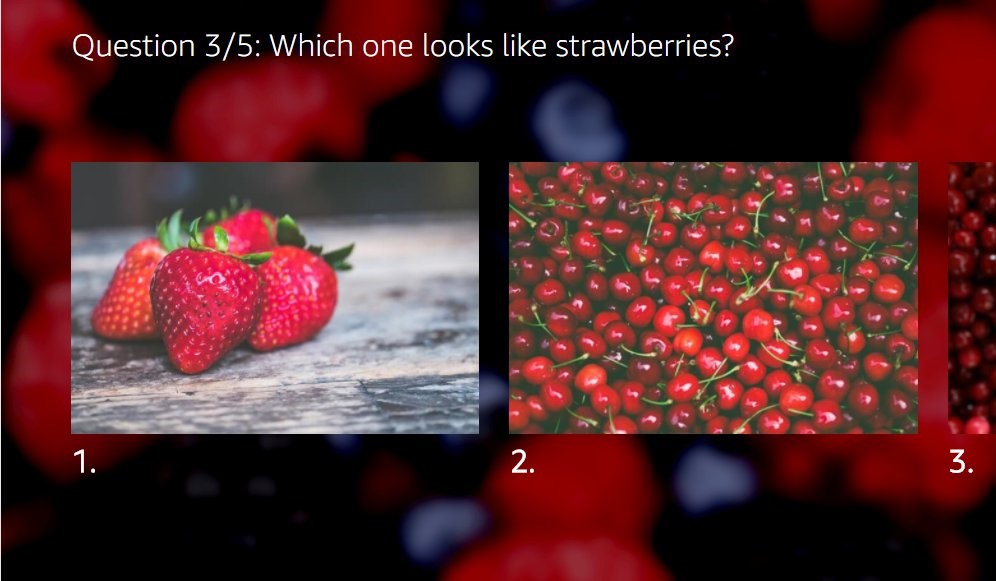

Who doesn’t love berries? Here we’ll use them as a foundation to build your next great Alexa skill. As you can imagine, the Berry Bash template is all about the tasty fruits. The first part of the sample skill provides users with information from the Berry Book via voice. It also lets users test their berry knowledge by playing a picture-based quiz in the form of Berry Buzz.

This skill sample comes prepacked with a complete interaction model, backend code, and full tutorial; it can be set up normally or via CLI with SMAPI. You can use this sample to practice building quiz and dictionary skills, or just swap out the hard-coded data for your own data set. We've made it easy by placing the data right at the top of the code here:

If you've already seen the city guide sample, you’re probably familiar with the concept. Also, you don't need to hard code this data; you can always use an external API to pull in data as discussed here.

As mentioned above, you just need to change some of the core variables related to the skill, such as the skill name and topic, and then provide some images and information related to your chosen category. For example, if you want to make the skill about planets, modify the topicData array with planet names, information, and images that you own. Keep these requirements in mind when choosing where to host your images. If you choose to host images on Amazon S3, make sure the images are publicly accessible.
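As a rough illustration of swapping in your own data set, the sketch below shows one way a planets version of the topicData array might look. The field names (`name`, `info`, `imageUrl`), the `findTopic` helper, the skill name, and the example.com URLs are all assumptions made for this example; check the sample's index.js for the shape it actually expects.

```javascript
// Hypothetical data set: the field names below are assumed for illustration
// and are not taken from the Berry Bash sample.
const skillName = 'Planet Party'; // assumed skill name

const topicData = [
  {
    name: 'Venus',
    info: 'Venus is the second planet from the Sun.',
    imageUrl: 'https://example.com/images/venus.png' // placeholder URL
  },
  {
    name: 'Mars',
    info: 'Mars is the fourth planet from the Sun.',
    imageUrl: 'https://example.com/images/mars.png' // placeholder URL
  },
  {
    name: 'Jupiter',
    info: 'Jupiter is the largest planet in the solar system.',
    imageUrl: 'https://example.com/images/jupiter.png' // placeholder URL
  }
];

// Case-insensitive lookup by name; returns undefined when nothing matches.
function findTopic(data, spokenName) {
  const wanted = String(spokenName || '').trim().toLowerCase();
  return data.find((item) => item.name.toLowerCase() === wanted);
}

const match = findTopic(topicData, 'mars');
console.log(skillName + ': ' + (match ? match.info : 'Sorry, I do not know that one.'));
```

Keeping the data in one array like this means the dictionary and quiz parts of a skill can both read from the same source.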

Using the Render Template for Echo Spot Display

When developing Alexa skills, the experience is always voice-first. However, now with Echo Show and Echo Spot, you can create richer experiences that display supportive content during a customer’s session with your skill. The render template makes it easy for your skill’s visual elements to automatically render to different screen sizes, including the circular screen on Echo Spot. As mentioned in our blog post about making the most of devices that support display, you can also tailor responses to the device the skill is running on. The Berry Bash template supports this functionality, so you don't have to write the code yourself.

    // Generic function
    function supportsDisplay() {
        var hasDisplay =
            this.event.context &&
            this.event.context.System &&
            this.event.context.System.device &&
            this.event.context.System.device.supportedInterfaces &&
            this.event.context.System.device.supportedInterfaces.Display;

        return hasDisplay;
    }

    // In use in the Berry Bash sample
    if (supportsDisplay.call(this)) { // Render a template if supported
        bodyTemplateMaker.call(this, 2, gameoverImage, cardTitle, '<b><font size="7">' + correctAnswersVal + ' / ' + pArray.length + ' correct.</font></b>', '<br/>' + speechOutput2, speechOutput, null, "tell me about berries", mainImgBlurBG);
    } else { // Only use voice output
        this.response.speak(speechOutput);
        this.response.shouldEndSession(null);
        this.attributes['lastOutputResponse'] = speechOutput;
        this.emit(':responseReady');
    }
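If you want to try the display check outside of a running skill, a stand-alone variant that takes the request envelope as an argument is easier to exercise. The sketch below is one way to do that; the two trimmed-down envelopes are made up for illustration and contain only the fields the check reads.

```javascript
// Stand-alone variant of the display check: takes the request envelope
// as an argument instead of relying on `this`.
function supportsDisplay(event) {
  const device = event && event.context && event.context.System &&
    event.context.System.device;
  return Boolean(
    device && device.supportedInterfaces && device.supportedInterfaces.Display
  );
}

// Trimmed-down request envelopes, invented for this example.
const screenEvent = {
  context: { System: { device: { supportedInterfaces: { Display: {} } } } }
};
const voiceOnlyEvent = {
  context: { System: { device: { supportedInterfaces: { AudioPlayer: {} } } } }
};

console.log(supportsDisplay(screenEvent));    // true
console.log(supportsDisplay(voiceOnlyEvent)); // false
console.log(supportsDisplay({}));             // false
```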

You'll find a lot of useful functions in the Alexa Node.js SDK documentation that will allow you to quickly build different render templates. The bodyTemplateMaker function used above is not part of the SDK, though; it is defined in the sample. If you find yourself creating multiple render templates in your skill, this function (or a similar function that takes in arguments) can help you expedite the work.

    // This function will not work by itself; you'll need to pull the
    // bodyTemplateTypePicker function, and any other dependencies, into
    // your code from the sample as well. It also assumes the SDK is
    // already loaded as Alexa.
    const makePlainText = Alexa.utils.TextUtils.makePlainText;
    const makeImage = Alexa.utils.ImageUtils.makeImage;
    const makeRichText = Alexa.utils.TextUtils.makeRichText;

    function bodyTemplateMaker(pBodyTemplateType, pImg, pTitle, pText1, pText2, pOutputSpeech, pReprompt, pHint, pBackgroundIMG) {
        var bodyTemplate = bodyTemplateTypePicker.call(this, pBodyTemplateType);
        bodyTemplate.setTitle(pTitle);

        if (pBodyTemplateType != 7) { // Text not supported in BodyTemplate7
            bodyTemplate.setTextContent(makeRichText(pText1) || null, makeRichText(pText2) || null); // Add text or null
        }

        if (pImg) {
            bodyTemplate.setImage(makeImage(pImg));
        }

        if (pBackgroundIMG) {
            bodyTemplate.setBackgroundImage(makeImage(pBackgroundIMG));
        }

        // Build the template only after every property has been set
        let template = bodyTemplate.build();

        this.response.speak(pOutputSpeech)
            .renderTemplate(template)
            .shouldEndSession(null); // Keeps session open without pinging user..

        this.response.hint(pHint || null, "PlainText");
        this.attributes['lastOutputResponse'] = pOutputSpeech;

        if (pReprompt) {
            this.response.listen(pReprompt); // .. but we will ping them if we add a reprompt
        }

        this.emit(':responseReady');
    }

You'll find a similar function for making list templates in the sample's index.js code too.

Applying Action Links and List Items

The Berry Bash sample uses the ElementSelected event to catch touches on the screen. There are two main visual and touch elements your users can interact with via the sample:

1. Action links: These appear blue in body templates (not to be used in list templates), and allow you to highlight certain words. Selecting these sends an event to 'ElementSelected' and based on the token set for the element, you can have your skill respond appropriately.

    var actionText1 = '<action value="dictionary_token"><i>' + skillDictionaryName + '</i></action>'; // Selectable text
    var actionText2 = '<action value="quiz_token"><i>' + skillQuizName + '</i></action>';

    'ElementSelected': function() { // To handle events when the screen is touched
        if (this.event.request.token == "dictionary_token") {
            // do this
        } else {
            // do that
        }
    }

2. List items: These are similar to action links in that you assign tokens to list items that you create and add to list templates. You can then tailor the response to the token of the list item that was selected.
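To make the token idea concrete, here is a minimal sketch that builds a ListTemplate1 payload as a plain object, following the structure described in the Display Interface Reference. The helper names (`makeListItem`, `makeListTemplate`), the `item_N` token scheme, the `berry_list` template token, and the example.com image URLs are assumptions for illustration; the sample uses its own helper built on the SDK.

```javascript
// Build one list item. The token is what comes back in the request
// when the user selects this item on screen.
function makeListItem(token, primaryText, imageUrl) {
  return {
    token: token,
    image: {
      contentDescription: primaryText,
      sources: [{ url: imageUrl }]
    },
    textContent: {
      primaryText: { type: 'PlainText', text: primaryText }
    }
  };
}

// Build a ListTemplate1 object; tokens follow a hypothetical "item_N" scheme.
function makeListTemplate(title, entries) {
  return {
    type: 'ListTemplate1',
    token: 'berry_list', // hypothetical template token
    backButton: 'HIDDEN',
    title: title,
    listItems: entries.map((entry, index) =>
      makeListItem('item_' + (index + 1), entry.name, entry.imageUrl))
  };
}

const listTemplate = makeListTemplate('Berry Book', [
  { name: 'Strawberry', imageUrl: 'https://example.com/images/strawberry.png' },
  { name: 'Blueberry', imageUrl: 'https://example.com/images/blueberry.png' }
]);

console.log(JSON.stringify(listTemplate, null, 2));
```

An object like this would go in the `template` field of a `Display.RenderTemplate` directive; the handler can then branch on whichever `item_N` token arrives.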

To keep the experience voice-first, you can extend the ElementSelected event and add relevant utterances to your interaction model. Check out the version in the Berry Bash sample and the example below:

    'ElementSelected': function() {
        if (this.event.request.token) {
            // Screen touched; do something
        } else if (this.event.request.intent.slots.numberValue.value) {
            // User chose an option with voice
        }
    }
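One way to keep the touch and voice paths from drifting apart is to reduce both to the same token before deciding how to respond. The sketch below assumes list items use a hypothetical `item_N` token scheme and that the voice intent carries a `numberValue` slot as in the handler above; the `resolveSelection` helper and the sample requests are invented for illustration.

```javascript
// Reduce a touch event or a spoken number to a single token.
// Returns null when the request carries neither.
function resolveSelection(request) {
  if (request.token) {
    return { source: 'touch', token: request.token };
  }
  const slots = request.intent && request.intent.slots;
  const spoken = slots && slots.numberValue && slots.numberValue.value;
  const position = parseInt(spoken, 10);
  if (!Number.isNaN(position)) {
    // Map "number three" onto the assumed item_N token scheme.
    return { source: 'voice', token: 'item_' + position };
  }
  return null;
}

// Trimmed-down requests, invented for this example.
const touchRequest = { type: 'Display.ElementSelected', token: 'item_2' };
const voiceRequest = {
  type: 'IntentRequest',
  intent: {
    name: 'ElementSelected',
    slots: { numberValue: { name: 'numberValue', value: '3' } }
  }
};

console.log(resolveSelection(touchRequest)); // { source: 'touch', token: 'item_2' }
console.log(resolveSelection(voiceRequest)); // { source: 'voice', token: 'item_3' }
```

For the voice path to reach the handler, the interaction model would need an intent with this name and a number slot, plus utterances such as "number three"; the exact slot type is left to your model.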

Learn More and Get Started

You can find these concepts and more in the Berry Bash sample in the Alexa skill-building cookbook. And be sure to check out the render template documentation for more ways to create engaging Alexa skills for Echo Show and Echo Spot. Reach out directly to me @jamielliottg, post on the forums, or get in touch with questions about this sample skill.

And check out these additional resources to learn more as you build:

- Build Skills for Echo Show and Echo Spot

- Best Practices for Designing Skills with a Screen

- Display Interface Reference

- Test for Screen-Based Interaction Issues in Your Alexa Skill

- Amazon Alexa Voice Design Guide

Build Engaging Skills, Earn Money with Alexa Developer Rewards

Every month, developers can earn money for eligible skills that drive some of the highest customer engagement. Developers can increase their level of skill engagement and potentially earn more by improving their skill, building more skills, and making their skills available in the US, the UK, and Germany. Learn more about our rewards program and start building today.
