Alexa Blogs

In a previous blog article, I explained how to build multimodal Alexa skills for Echo Show and Echo Spot using the Alexa Skills Kit (ASK) Software Development Kit (SDK) for Java. In that article, I demonstrated how to implement body templates using v2 of the SDK. Since then, we announced the Alexa Presentation Language (APL), Amazon’s new voice-first design language that allows you to create rich, interactive displays customized for tens of millions of Alexa-enabled devices with screens. APL gives you more control and flexibility than body templates for adding visual elements to your skill.

If you are new to APL, this blog article provides information on how to get started with APL to build multimodal Alexa skills. Once you know how to build an APL document, you can quickly update your existing multimodal skills in Java using APL by following the steps below.

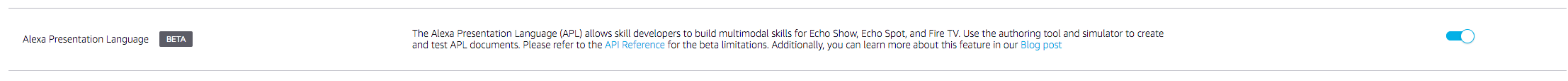

First, enable the APL interface for your skill. Go to your skill in the Alexa Developer Console and click Interfaces. Alexa Presentation Language is listed as one of the interfaces. Toggle the button next to it and save the interfaces.

Alternatively, if you are using SMAPI, you can add the APL interface to your skill.json file:

    "interfaces": [
      {
        "type": "ALEXA_PRESENTATION_APL"
      }
    ]

For a skill implementing a body template, we build the response with .addRenderTemplateDirective(template) and we pass in the body template object as shown in the example below:

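    import com.amazon.ask.dispatcher.request.handler.HandlerInput;
    import com.amazon.ask.model.Response;
    import com.amazon.ask.model.interfaces.display.Image;
    import com.amazon.ask.model.interfaces.display.Template;
    import java.util.Optional;

    @Override
    public Optional<Response> handle(HandlerInput input) {
        String title = "Title";
        String primaryText = "Primary Text";
        String secondaryText = "Secondary Text";
        String speechText = "Sample Output Speech Text";
        String imageUrl = "imageUrl";

        // getImage and getBodyTemplate3 are helper methods that are not shown here.
        Image image = getImage(imageUrl);
        Template template = getBodyTemplate3(title, primaryText, secondaryText, image);

        return input.getResponseBuilder()
                .withSpeech(speechText)
                .withSimpleCard(title, speechText)
                .addRenderTemplateDirective(template)
                .withReprompt(speechText)
                .build();
    }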

To add APL to the skill, remove the card [.withSimpleCard(title, speechText)] and the render template directive [.addRenderTemplateDirective(template)] from the response, and add the APL directive instead, as shown below:

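    import com.amazon.ask.dispatcher.request.handler.HandlerInput;
    import com.amazon.ask.exception.AskSdkException;
    import com.amazon.ask.model.Response;
    import com.amazon.ask.model.interfaces.alexa.presentation.apl.RenderDocumentDirective;
    import com.fasterxml.jackson.core.type.TypeReference;
    import com.fasterxml.jackson.databind.ObjectMapper;
    import java.io.File;
    import java.io.IOException;
    import java.util.HashMap;
    import java.util.Map;
    import java.util.Optional;

    @Override
    public Optional<Response> handle(HandlerInput input) {
        String speechText = "Sample Speech Text";

        // Screen device: the request context carries display information.
        if (null != input.getRequestEnvelope().getContext().getDisplay()) {
            try {
                ObjectMapper mapper = new ObjectMapper();

                // Deserialize the APL document into a generic map.
                TypeReference<HashMap<String, Object>> documentMapType = new TypeReference<HashMap<String, Object>>() {};
                Map<String, Object> document = mapper.readValue(new File("document.json"), documentMapType);

                // Deserialize the data sources the document binds to.
                TypeReference<HashMap<String, Object>> dataSourceMapType = new TypeReference<HashMap<String, Object>>() {};
                Map<String, Object> data = mapper.readValue(new File("data.json"), dataSourceMapType);

                return input.getResponseBuilder()
                        .withSpeech(speechText)
                        .addDirective(RenderDocumentDirective.builder()
                                .withDocument(document)
                                .withDatasources(data)
                                .build())
                        .withReprompt(speechText)
                        .build();
            } catch (IOException e) {
                throw new AskSdkException("Unable to read or deserialize template data", e);
            }
        }

        // Headless device: respond with speech only.
        return input.getResponseBuilder()
                .withSpeech(speechText)
                .withReprompt(speechText)
                .build();
    }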

As shown above, we add the APL directive to the response by calling .addDirective() and passing it a RenderDocumentDirective object, which is built as follows:

    RenderDocumentDirective.builder()
            .withDocument(document)
            .withDatasources(data)
            .build()
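For orientation, here is a minimal sketch of the top-level shape that the directive takes in the JSON response. It uses only plain Java maps rather than the SDK, so the class name RenderDocumentShape and the placeholder document and data source contents are illustrative assumptions. The type string and the document and datasources field names are those of the APL RenderDocument directive.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class RenderDocumentShape {

    // Assembles a map with the same top-level fields as the RenderDocument
    // directive JSON. This is a stand-in for illustration, not the SDK class.
    public static Map<String, Object> renderDocumentDirective(
            Map<String, Object> document, Map<String, Object> datasources) {
        Map<String, Object> directive = new LinkedHashMap<>();
        directive.put("type", "Alexa.Presentation.APL.RenderDocument");
        directive.put("document", document);
        directive.put("datasources", datasources);
        return directive;
    }

    public static void main(String[] args) {
        // Placeholder contents (assumption): a real document comes from document.json.
        Map<String, Object> document = new LinkedHashMap<>();
        document.put("type", "APL");
        Map<String, Object> datasources = new LinkedHashMap<>();

        Map<String, Object> directive = renderDocumentDirective(document, datasources);
        System.out.println(directive.keySet()); // prints [type, document, datasources]
    }
}
```

In the skill itself, the SDK's RenderDocumentDirective builder takes care of producing this structure, so the sketch is only meant to show what ends up in the response.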

The document and data objects are built by unmarshalling the JSON files into Map objects:

    ObjectMapper mapper = new ObjectMapper();
    TypeReference<HashMap<String, Object>> documentMapType = new TypeReference<HashMap<String, Object>>() {};
    Map<String, Object> document = mapper.readValue(new File("document.json"), documentMapType);

The document is the JSON produced by the APL authoring tool.
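To make the shape of those maps concrete, the following sketch builds a very small APL document and a matching data source directly in Java instead of reading them from files. It is only an illustration under stated assumptions: the class name AplDocumentSketch, the data source name helloData, and the single Text component are hypothetical and do not come from the authoring tool output.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

public class AplDocumentSketch {

    // Builds a minimal APL document: one Text component bound to the data source.
    public static Map<String, Object> buildDocument() {
        Map<String, Object> text = new LinkedHashMap<>();
        text.put("type", "Text");
        // Data-binding expression; "helloData" is a hypothetical data source name.
        text.put("text", "${payload.helloData.title}");

        Map<String, Object> mainTemplate = new LinkedHashMap<>();
        mainTemplate.put("parameters", Collections.singletonList("payload"));
        mainTemplate.put("items", Collections.singletonList(text));

        Map<String, Object> document = new LinkedHashMap<>();
        document.put("type", "APL");
        document.put("version", "1.0");
        document.put("mainTemplate", mainTemplate);
        return document;
    }

    // Builds the data sources map that the document's binding expression reads from.
    public static Map<String, Object> buildDatasources() {
        Map<String, Object> helloData = new LinkedHashMap<>();
        helloData.put("title", "Title");

        Map<String, Object> datasources = new LinkedHashMap<>();
        datasources.put("helloData", helloData);
        return datasources;
    }

    public static void main(String[] args) {
        Map<String, Object> document = buildDocument();
        Map<String, Object> datasources = buildDatasources();
        System.out.println(document.get("type") + " " + datasources.keySet()); // prints APL [helloData]
    }
}
```

Maps built this way have the same Map<String, Object> type as the ones produced by ObjectMapper, so they could be passed to withDocument and withDatasources in the same way. Keeping the document in a JSON file is usually more convenient, since it can be pasted straight from the authoring tool.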

Note: In the code snippet above, we check whether the device is a headless device or a screen device. For a headless device (such as Echo Dot), we do not include the APL directive in the response. You can still include a card in the response for headless devices.
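The snippet above detects a screen by checking the display object in the request context. As an alternative sketch (not the approach used in this article), a skill can look at the interfaces the device reports under context.System.device.supportedInterfaces in the request JSON and send the APL directive only when Alexa.Presentation.APL is listed. The example below works on the request as plain nested maps so that it runs without the SDK. The class name AplSupportCheck and the helper names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

public class AplSupportCheck {

    static final String APL_INTERFACE = "Alexa.Presentation.APL";

    // Returns the nested map stored under key, or null if it is absent or not a map.
    @SuppressWarnings("unchecked")
    private static Map<String, Object> child(Map<String, Object> parent, String key) {
        Object value = (parent == null) ? null : parent.get(key);
        return (value instanceof Map) ? (Map<String, Object>) value : null;
    }

    // True when the request reports APL among the device's supported interfaces.
    public static boolean supportsApl(Map<String, Object> requestEnvelope) {
        Map<String, Object> context = child(requestEnvelope, "context");
        Map<String, Object> system = child(context, "System");
        Map<String, Object> device = child(system, "device");
        Map<String, Object> interfaces = child(device, "supportedInterfaces");
        return interfaces != null && interfaces.containsKey(APL_INTERFACE);
    }

    // Test helper: builds a request envelope map with the given supported interfaces.
    public static Map<String, Object> envelopeWith(Map<String, Object> supportedInterfaces) {
        Map<String, Object> device = new HashMap<>();
        device.put("supportedInterfaces", supportedInterfaces);
        Map<String, Object> system = new HashMap<>();
        system.put("device", device);
        Map<String, Object> context = new HashMap<>();
        context.put("System", system);
        Map<String, Object> envelope = new HashMap<>();
        envelope.put("context", context);
        return envelope;
    }

    public static void main(String[] args) {
        Map<String, Object> screen = new HashMap<>();
        screen.put(APL_INTERFACE, new HashMap<String, Object>());
        Map<String, Object> headless = new HashMap<>();

        System.out.println(supportsApl(envelopeWith(screen)));   // prints true
        System.out.println(supportsApl(envelopeWith(headless))); // prints false
    }
}
```

With either check, the fallback path stays the same: devices that do not qualify receive the speech-only response.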

You can find additional instructions on building the APL document here. This blog article explains in detail how to migrate display templates to APL. Once you have an APL document, you can follow the steps above to quickly update your skill to use APL.

Adding APL to your skill gives Alexa customers with screen devices a richer, more immersive experience. You can tailor that experience to devices of different sizes, from Echo Spot to Fire TV. To learn more and get started with APL faster, check out this Java sample skill that uses APL.

Related Resources

- How to Get Started with the Alexa Presentation Language to Build Multimodal Alexa Skills

- How to Quickly Update Your Existing Multimodal Alexa Skills with the Alexa Presentation Language

- APL Sample Skill for Java

- 6 Resources for Building Visually Rich, Multimodal Skills

- 10 Tips for Designing Alexa Skills with Visual Responses